If you’ve worked in software development or IT for any amount of time, chances are you’ve at least heard about containers…and maybe even Kubernetes.

Maybe you’ve heard that Google manages to spin up two billion containers a week to support their various services, or that Netflix runs its streaming, recommendation, and content systems on a container orchestration platform called Titus.

This is all very exciting stuff, but I’m more excited than ever to write and talk about these things, for one simple reason: we are finally at a point where these technologies can make our lives as developers and IT professionals easier!

And even better…you no longer have to work at Google (or one of the other giants) to have a practical opportunity to use them.

Containers

Before getting into orchestrators and what they actually offer, let’s briefly discuss the fundamental piece of technology that all of this depends on – the container itself.

A container is a digital package of sorts, and it includes everything needed to run a piece of software. By “everything,” I mean the application code, any required configuration settings, and the system tools that are normally brought to the table by a computer’s operating system. With those three pieces, you have a digital package that can run a software application in isolation across different computing platforms because the dependencies are included in that package.

And there is one more feature that makes containers really useful – the ability to snapshot the state of a container at any point. This snapshot is called a container “image.” Think of it the way you would think of a virtual machine image, except that many of the complexities of capturing a full-blown machine image (state of the OS, consistency of attached disks at the time of the snapshot, etc.) are absent. Only the components needed to run the software are present, so one or a million instances can be spun up directly from that image, and they should not interfere with each other. These “instances” are the actual running containers.

So why is that important? Well, we’ve just alluded to one reason: containers can run software across different operating systems (various Linux distributions, Windows, macOS, etc.). You can build a package once and run it in many different places, which makes containers a great mechanism for application packaging and deployment.

To build on this point, containers are also a great way to distribute your packages as a developer. I can build my application on my development machine, create a container image that includes the application and everything it needs to run, and push that image to a remote location (typically called a container registry) where it can be downloaded and turned into one or more running instances.

The last point to make is that, beyond packaging everything your application needs to run, a container gives you a way to enforce a clear boundary around your application and to isolate it at runtime. This feature is important when you’re running a mix of various applications and tools…and you want to make sure a rogue process built or run by someone else doesn’t interfere with the operation of your application.

Container Orchestrators

So containers came along and provided a bunch of great benefits for me as a developer. However, what if I start building an application and then realize that I need a way to organize and run multiple instances of my container at runtime to address the expected demand? Or what if I’m building a system composed of multiple microservices that all need their own container instances running? Do I have to figure out a way to maintain the desired state of a system that’s really a dynamic collection of container instances?

This is where container orchestration comes in. A container orchestrator is a tool to help manage how your container instances are created, scaled, managed at runtime, placed on underlying infrastructure, communicate with each other, etc. The “underlying infrastructure” is a fleet of one or more servers that the orchestrator manages – the cluster. Ultimately, the orchestrator helps manage the complexity of taking your container-based, in-development applications to a more robust platform.

Typically, interaction with an orchestrator occurs through a well-defined API, and the orchestrator takes on the tasks of creating, deploying, and networking your container instances, exactly as you’ve specified in your API calls, across any container host (a server included in the cluster).

Using these fundamental components, orchestrators provide a unified compute layer on top of a fleet of machines that allows you to decouple your application from these machines. And the best orchestrators go one step further and allow you to specify how your application should be composed, thus taking the responsibility of running the application and maintaining the correct runtime configuration…even when unexpected events occur.
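To make “maintaining the correct runtime configuration” a little more concrete, here is a minimal sketch of the idea in Python. It is a toy model, not how any real orchestrator is implemented: `ToyCluster`, `reconcile`, and the app names are all made up for illustration. The point is only that you declare how many instances you want, and a loop keeps comparing that against what is actually running.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: tracks running instances per app."""
    running: dict = field(default_factory=dict)   # app name -> set of instance ids
    _ids: count = field(default_factory=count)    # source of unique instance ids

    def start(self, app):
        self.running.setdefault(app, set()).add(f"{app}-{next(self._ids)}")

    def stop(self, app):
        self.running[app].pop()


def reconcile(desired, cluster):
    """Compare desired instance counts with what is running and close the gap."""
    actions = []
    for app in sorted(set(desired) | set(cluster.running)):
        want = desired.get(app, 0)
        have = len(cluster.running.get(app, ()))
        for _ in range(want - have):      # too few instances: start more
            cluster.start(app)
            actions.append(("start", app))
        for _ in range(have - want):      # too many instances: stop the surplus
            cluster.stop(app)
            actions.append(("stop", app))
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 1}     # what I asked for
    cluster = ToyCluster()
    reconcile(desired, cluster)           # brings the empty cluster up to spec
    cluster.running["web"].pop()          # simulate an instance crashing
    print(reconcile(desired, cluster))    # [('start', 'web')]
```

Notice that the caller never says “start a replacement.” It only states the desired counts, and the loop works out what has to change after the crash.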

Kubernetes

Kubernetes is a container orchestrator that delivers the capabilities mentioned above. (The name “Kubernetes” comes from the Greek term for “pilot” or “helmsman of a ship.”) Currently, it is the most popular container orchestrator in the industry.

Kubernetes was originally developed by Google, based in part on the lessons learned from developing its internal cluster management and scheduling system, Borg. Google open-sourced the project in 2014 to encourage community involvement in its development, and in 2015 donated it to the newly formed Cloud Native Computing Foundation (CNCF). The CNCF is part of the Linux Foundation and operates as a “vendor-neutral” governance group. Kubernetes is now consistently in the top ten open source projects based on total contributors.

Many in the industry say that Kubernetes has “won” the mindshare battle for container orchestrators, but what gives Kubernetes such a compelling value proposition? Well, beyond meeting the capabilities mentioned above regarding what an orchestrator “should” do, the following points also illustrate what makes Kubernetes stand out:

- The largest ecosystem of self-driven contributors and users of any orchestrator technology facilitated by CNCF, GitHub, etc.

- Extensive client application platform support, including Go, Python, Java, .NET, Ruby, and many others.

- The ability to deploy clusters across on-premises or the cloud, including native, managed offerings across the major public cloud providers (AWS, GCP, Azure). In fact, you can use the SAME API with any deployment of Kubernetes!

- Diverse workload support with extensive community examples – stateless and stateful, batch, analytics, etc.

- Resiliency – Kubernetes is a loosely coupled collection of components centered around deploying, maintaining, and scaling workloads.

- Self-healing – Kubernetes works as an engine for resolving state by converging the actual and the desired state of the system.
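To give a feel for what “desired state” looks like when you hand it to Kubernetes, here is a small sketch that builds a Deployment object as a plain Python dictionary and prints it as JSON (Kubernetes manifests are usually written in YAML, but JSON is accepted too). The helper `deployment_manifest`, the app name `hello-web`, and the image reference are placeholders I made up for illustration.

```python
import json


def deployment_manifest(name, image, replicas, port):
    """Build a Kubernetes Deployment object as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                  # desired number of pods
            "selector": {"matchLabels": labels},   # which pods this Deployment owns
            "template": {                          # the pod to stamp out
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # The name and image below are hypothetical placeholders.
    manifest = deployment_manifest(
        "hello-web", "registry.example.com/hello-web:1.0", replicas=3, port=8080
    )
    print(json.dumps(manifest, indent=2))
```

Assuming you have a cluster and `kubectl` configured, the printed JSON could be saved to a file and submitted with `kubectl apply -f`. The same document should work whether the cluster runs on-premises or on one of the managed cloud offerings.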

Kubernetes Architecture

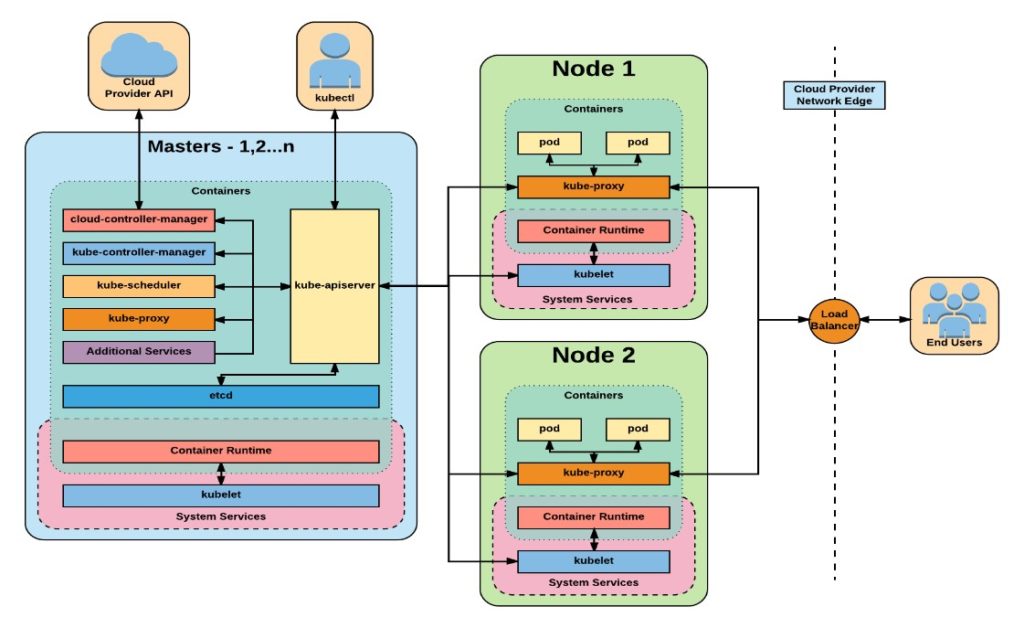

A Kubernetes cluster will always include a “master” and one or more “workers”. The master is a collection of processes that manage the cluster, and these processes are deployed on a master node or multiple master nodes for High Availability (HA). Included in these processes are:

- The API server (kube-apiserver)

- A distributed key-value store for the persistence of cluster management data (etcd)

- The core control loops for monitoring existing state and management of desired state (kube-controller-manager)

- The core control loops that allow specific cloud platform integration (cloud-controller-manager)

- A scheduler component for the deployment of Kubernetes container groups, known as pods (kube-scheduler)

Worker nodes are responsible for actually running the container instances within the cluster. Compared with the master, worker nodes are simpler: they receive instructions from the master and run the containers they are told to run. Three main components make a machine a worker node in a Kubernetes cluster: an agent called kubelet that registers the node and communicates with the master, a network proxy for interfacing with the cluster network stack (kube-proxy), and a plug-in interface that allows kubelet to use a variety of container runtimes, called the Container Runtime Interface (CRI).
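To illustrate the scheduler’s job of deciding which worker node each pod lands on, here is a deliberately simplified sketch. It is not the real kube-scheduler algorithm, which weighs many more factors; it only mimics the general shape of “filter out nodes that can’t fit the pod, then pick the best of the rest.” The `Node` and `Pod` classes, the CPU-only resource model, and the node names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float   # CPU cores not yet reserved on this worker


@dataclass
class Pod:
    name: str
    cpu: float        # CPU cores the pod requests


def schedule(pods, nodes):
    """Place each pod on the fitting node with the most free CPU (toy policy)."""
    placement = {}
    for pod in pods:
        # Filter: keep only nodes with enough spare CPU for this pod.
        candidates = [n for n in nodes if n.free_cpu >= pod.cpu]
        if not candidates:
            placement[pod.name] = None    # nothing fits; the pod stays pending
            continue
        # Score: prefer the node with the most free CPU.
        best = max(candidates, key=lambda n: n.free_cpu)
        best.free_cpu -= pod.cpu          # reserve the capacity
        placement[pod.name] = best.name
    return placement


if __name__ == "__main__":
    nodes = [Node("worker-1", 2.0), Node("worker-2", 4.0)]
    pods = [Pod("a", 1.0), Pod("b", 2.0), Pod("c", 2.0), Pod("d", 3.0)]
    print(schedule(pods, nodes))
    # {'a': 'worker-2', 'b': 'worker-2', 'c': 'worker-1', 'd': None}
```

Pod `d` ends up unplaced because no node has three free cores left, which is roughly what a pod stuck in a pending state means: the scheduler has nowhere to put it yet.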

Managed Kubernetes and Azure Kubernetes Service

“Managed Kubernetes” is a deceptively broad term that describes a scenario where a public cloud provider (Microsoft, Amazon, Google, etc.) goes a step beyond simply hosting your Kubernetes clusters in virtual machines and takes responsibility for deploying and managing your cluster for you. Or, more accurately, they will manage portions of your cluster for you. I say “deceptively” broad for this reason – the portions that are “managed” vary by vendor.

The idea is that the cloud provider:

- Is experienced at managing infrastructure at scale and can leverage tools and processes most individuals or companies can’t.

- Is experienced at managing Kubernetes specifically and can leverage dedicated engineering and support teams.

- Can add value by providing supporting services on the cloud platform.

In this model, the provider does things like abstracting away the need to operate the underlying virtual machines in a cluster and automating actions like scaling a cluster, upgrading to new versions of Kubernetes, etc.

So the advantage for you, as a developer, is that you can focus more of your attention on building the software that will run on top of the cluster, instead of on managing your Kubernetes cluster, patching it, providing HA, etc. Additionally, the provider will often offer complementary services you can leverage like a private container registry service, tools for monitoring your containers in the cluster, etc.

Microsoft Azure offers the Azure Kubernetes Service (AKS), which is Azure’s managed Kubernetes offering. AKS allows full production-grade Kubernetes clusters to be provisioned through the Azure portal or automation scripts (ARM templates, PowerShell, the Azure CLI, or a combination). Key components of the cluster provisioned through the service include:

- A fully managed, highly available master. There’s no need to run separate virtual machines for the master components. The service provides this for you.

- Automated provisioning of worker nodes – deployed as Virtual Machines in a dedicated Azure resource group.

- Automated cluster node upgrades (Kubernetes version).

- Cluster scaling through auto-scale or automation scripts.

- CNCF certification as a conformant Kubernetes service. This means AKS passes the CNCF’s Kubernetes conformance tests, so the standard Kubernetes APIs behave as they would on any other certified cluster.

- Integration with supporting Azure services including Azure Virtual Networks, Azure Storage, Azure Role-Based Access Control (RBAC), and Azure Container Registry.

- Integrated logging for apps, nodes, and controllers.

Conclusion

The world of containers continues to evolve, and orchestration is an important consideration when deploying your container-based applications to environments beyond “development.” While not simple, Kubernetes is a very popular choice for container orchestration and has extremely strong community support. The evolution of managed Kubernetes makes using this platform more realistic than ever for developers (or businesses) interested in focusing on “shipping” software.