Recently I was working on a project for a DoD client looking to move multiple, siloed on-premise data workloads to the cloud as part of an Azure capabilities proof of concept. The client needed to upload large amounts of data, create parquet files, perform ad-hoc analysis using jupyter notebooks, and make data available to query using SQL for a reporting dashboard. A single dataset was approximately a terabyte with all the data measured in petabytes.

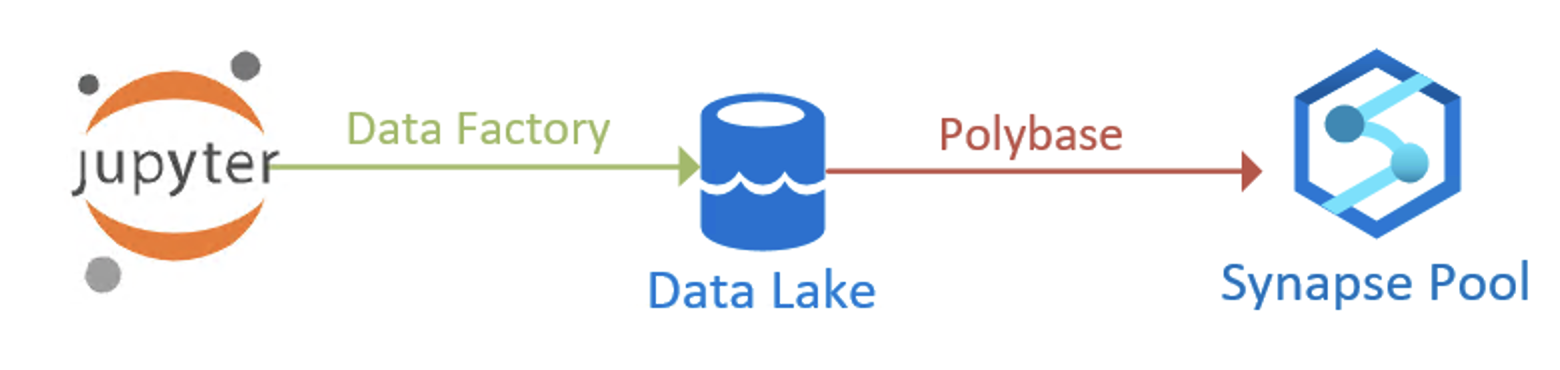

After completing the analysis, we ended up with many files staged in a data lake. Due to the considerable amount of data we were expecting in the future, we didn’t want to pay to store this data twice spread over multiple databases. We opted to take advantage of Azure Synapse and Polybase to directly query parquet files in the data lake using external tables[i]. We ended up with the following data processing flow:

When setting up the parquet files to be queried as an external table, some of them had many fields (200+), which led to numerous errors and quickly became very tedious. In addition, due to the nature of the project, numerous tables were often created. To avoid manually creating the tables, we looked for a solution to automatically create the external tables but could not find an existing solution. So it was up to me to create a simple solution that would work in our current environment without adding additional resources.

The solution was to leverage Data Factories Get Metadata activity[ii] while moving the data into the staging directories. The activity could give us the schema of the parquet files as a JSON string. I then take this JSON schema and pass it to a stored procedure on the synapse pool that would parse the JSON and insert the fields into a table I could use later:

WITH Fields (fieldOrder, fieldName, fieldType) AS (

SELECT

[ key ] AS fieldOrder,

JSON_VALUE([ value ], 'strict $.name') AS fieldName,

JSON_VALUE([ value ], 'strict $.type') AS fieldType

FROM

OPENJSON(@ schema)

)

INSERT INTO

tables_to_create(

tableName,

fieldOrder,

fieldName,

fieldType,

executeTime

)

SELECT

@tableName,

fieldOrder,

fieldName,

fieldType,

translatedType = CASE

WHEN fieldType = 'Single' THEN 'real'

WHEN fieldType = 'Boolean' THEN 'bit'

WHEN fieldType = 'Double' THEN 'float'

WHEN fieldType = 'Int64' THEN 'bigint'

ELSE NULL

END

@ExecTime

FROM

Fields

Then build a SQL command to check the existence of the table and then create it if it doesn’t exist:

SET

@sqlCommand = 'IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N''[dbo].[' + @tableName + ']'') AND type in (N''U''))

CREATE EXTERNAL TABLE [dbo].[' + @tableName + '] ('

WHILE((SELECT COUNT(*) FROM tables_to_create WHERE executeTime = @ExecTime) > 0)

BEGIN

DECLARE @key int

SELECT

@key = MIN(fieldOrder)

FROM

tables_to_create

WHERE

executeTime = @ExecTime

DECLARE @fieldName VARCHAR(50)

DECLARE @translatedType VARCHAR(50)

SELECT

@fieldName = fieldName,

@translatedType = translatedType

FROM

tables_to_create

WHERE

fieldOrder = @key

AND executeTime = @ExecTime

SET

@sqlCommand = @sqlCommand + '

[' + @fieldName + '] [' + @translatedType + '] NULL'

DELETE FROM

tables_to_create

WHERE

fieldOrder = @key

AND executeTime = @ExecTime

IF((SELECT COUNT(*) FROM tables_to_create WHERE executeTime = @ExecTime) > 0)

SET

@sqlCommand = @sqlCommand + ', '

END

SET

@sqlCommand = @sqlCommand + '

)

WITH

(

LOCATION = ''/' + @folderPath + ''',

DATA_SOURCE = DataLakeStaged,

FILE_FORMAT = StagedParquet

)'

EXEC(@sqlCommand)

This frees up the analyst from needing to manually create the external tables and know the mapping in the data factory to point to the correct location on the data lake. The analysts need to worry about making sure the name and path conventions we set up for syncing don’t land different schemas in the same folder.