As AI (Artificial Intelligence) and machine learning continue to make waves in various industries, security and data protection are among the top concerns for organizations. These concerns often spark debate, and we hear many questions about them in the context of Azure OpenAI. In this blog post, we share the most common of those questions and our insights on how to tackle them.

Our aim is to give you a solid grasp of what Azure OpenAI offers in terms of security. That way, you can make informed choices to minimize risks as you move forward. We’ll be covering important topics like how to deploy Azure OpenAI securely, answering questions we often get asked, and tips to get you started on the right foot.

What is Azure OpenAI?

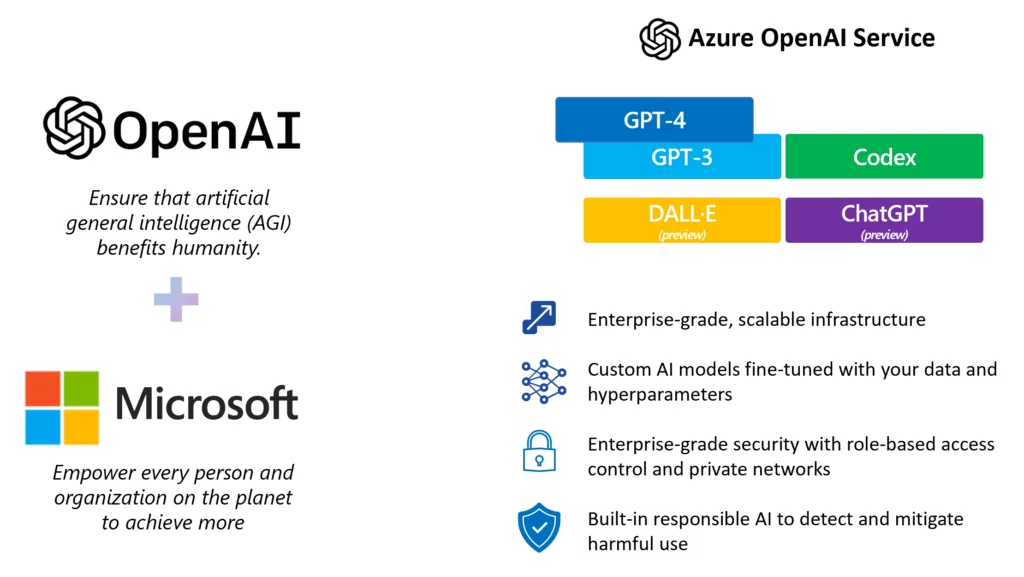

Azure OpenAI Service, the product of a partnership between Microsoft and OpenAI, offers REST API access to advanced language models like GPT-4 and GPT-3.5-Turbo. This cloud-based platform simplifies AI integration into various applications, with use cases from content generation to natural language translation. Designed for scalability and security, the service meets enterprise standards and can be accessed via REST APIs, the Python SDK, or the Azure OpenAI Studio web interface. For more information, see Microsoft’s documentation on Azure OpenAI and Microsoft’s security services.

Figure 1: Source
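To make the REST access path concrete, here is a minimal sketch that builds (but does not send) a chat completions request using only the Python standard library. The resource name, deployment name, and key are placeholders, and the `api-version` value is an assumption that should be checked against Microsoft’s current API reference.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, user_message,
                       api_version="2024-02-01"):
    """Build (but do not send) a chat completions request for Azure OpenAI."""
    # The endpoint is scoped to your own resource and model deployment.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": [{"role": "user", "content": user_message}]})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        # Key-based auth uses the "api-key" header; keep real keys out of source code.
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values for illustration only.
request = build_chat_request("my-resource", "my-gpt4-deployment", "<your-key>", "Hello")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. In production, a key pulled from a secret store or token-based authentication is generally preferable to a hard-coded key.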

Azure OpenAI Security Q&A

1. What Separates Azure OpenAI Security from Other OpenAI Services?

Azure OpenAI sets itself apart in security through its integration with Microsoft’s security services and its adherence to industry best practices. This integration offers several advantages:

- Security Ecosystem: Integrates OpenAI models with Azure’s suite of security tools, such as Azure Security Center (now Microsoft Defender for Cloud), Azure Active Directory (now Microsoft Entra ID), and Azure Key Vault.

- Compliance Standards: Adherence to global and industry-specific standards including ISO 27001, HIPAA, and FedRAMP.

- Role-Based Access Control (RBAC): Fine-grained control for authorizing personnel at various levels within an organization.

- Content Controls: Enables customizable content filtering and abuse monitoring to tailor data processing restrictions.

- Data Confidentiality: Assurance that customer data is not used for model training, so it stays confidential.

- Data Privacy: Utilizes data isolation techniques to ensure customer data remains inaccessible to other Azure users.

- IP Liability Coverage: Safeguards against intellectual property risks, providing an additional layer of security.

2. Is My Data Shared with Others?

No, Microsoft does not share your Azure OpenAI data with others. The service follows Microsoft’s Responsible AI framework along with strict security requirements, ensuring that your data stays within your designated Azure environment. Additionally, Azure OpenAI supports network isolation, which segregates each customer’s data and applications to prevent cross-contamination.

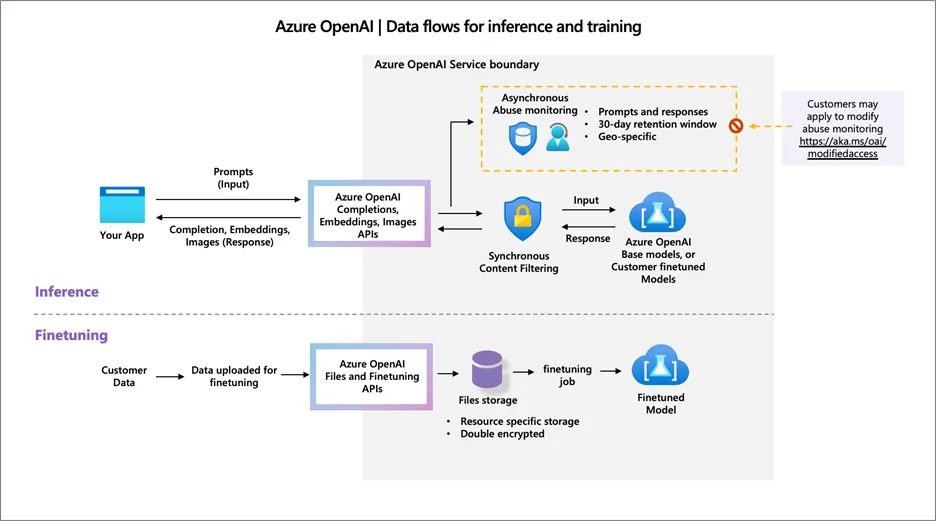

3. Does Microsoft Use My Data to Train Models?

No, your data is not used to train or improve Microsoft’s products or any third-party services. Using a pre-trained language model has an added security advantage: it reduces the need for extensive data manipulation. This limits the exposure of your data and supports the goal of providing strong security protocols.

Figure 2: Source

4. Is My Data Encrypted?

Yes. Azure OpenAI, like other Azure services, encrypts data at rest by default using FIPS 140-2 compliant 256-bit AES encryption. Encryption is automatic and requires no action on your part. For additional control, you can manage your own encryption keys through integration with Azure Key Vault.

5. How Can I Control Access to Sensitive Data?

When it comes to access control, Azure OpenAI mirrors the security protocols of other Microsoft services. We highly recommend adopting the principle of ‘least privilege’ when setting up user permissions, and enabling multi-factor authentication (MFA) and role-based access control (RBAC). These layers of security not only enhance data protection but also offer more granular control over who can access what.
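One way to put least privilege into practice is to review role assignments regularly. The sketch below flags principals that hold broad roles on an Azure OpenAI resource. The record shape (`principalName`, `roleDefinitionName`) is modeled on the JSON that `az role assignment list` produces, and the set of roles treated as too broad is an illustrative assumption, not an official list.

```python
# Roles treated as broader than needed for callers that only run inference.
# This set is an illustrative policy choice; adjust it to your own standards.
BROAD_ROLES = {"Owner", "Contributor", "Cognitive Services Contributor"}


def find_overprivileged(assignments):
    """Return the assignments whose role is in BROAD_ROLES."""
    return [a for a in assignments if a["roleDefinitionName"] in BROAD_ROLES]


# Sample data for illustration; real input would come from your own export.
sample = [
    {"principalName": "app-chatbot",
     "roleDefinitionName": "Cognitive Services OpenAI User"},
    {"principalName": "dev-alice", "roleDefinitionName": "Owner"},
]

flagged = find_overprivileged(sample)
for assignment in flagged:
    print(f"{assignment['principalName']} holds {assignment['roleDefinitionName']}")
```

An application that only sends prompts usually needs no more than an inference-level role such as Cognitive Services OpenAI User, so anything the check flags is a candidate for review rather than an automatic violation.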

6. Can I Keep My Data Within Specific Azure Regions?

Yes, Azure’s data residency capabilities allow you to specify the geographic region in which your data is processed and stored. This is especially beneficial for organizations that need to comply with governmental standards such as FedRAMP and CMMC, which mandate that data be kept within U.S. regions.

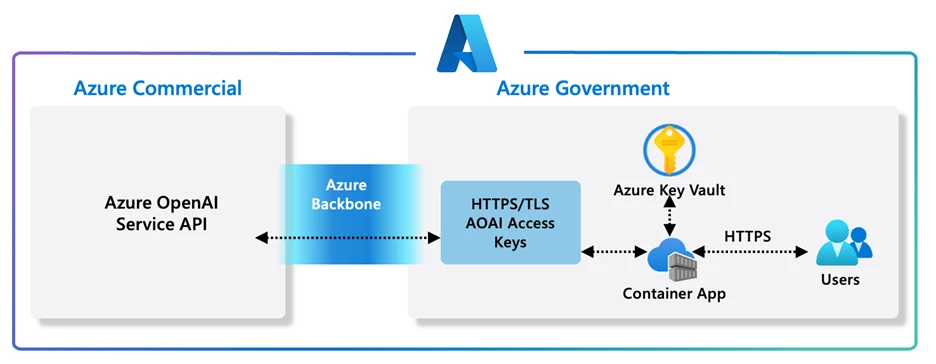

7. Is Azure OpenAI Approved for Government Use?

Yes, the Azure OpenAI service has achieved a FedRAMP High P-ATO in US Commercial regions. This means that it can meet the specific security requirements of US federal agencies and the Department of Defense (DoD) for certain data classifications. Government clients can opt to create a secure link between Azure Government and Azure OpenAI’s Commercial Service. See the reference architecture for more information on how this connection works.

For further information on AIS’ methodology for securing and accrediting these connections in compliance with government standards, please do not hesitate to contact us.

Figure 3: Access and reference architecture

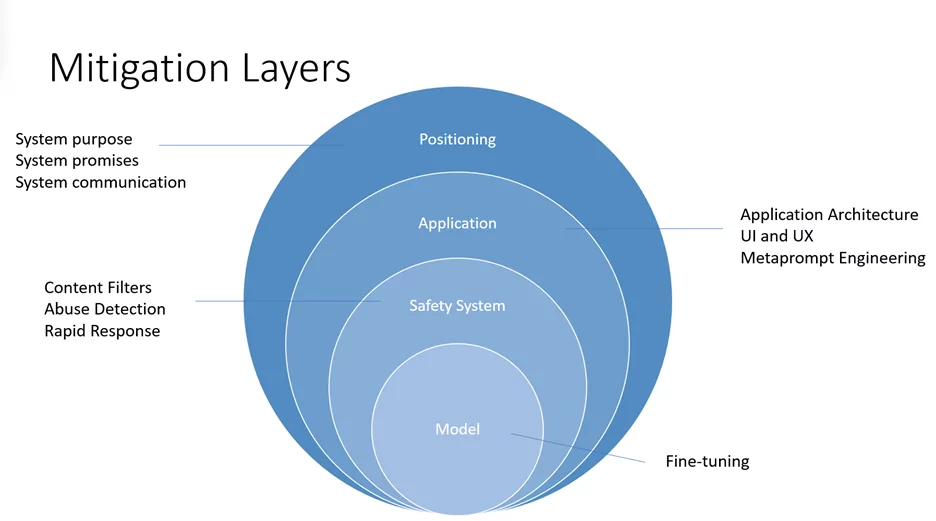

8. How Does Microsoft Ensure Ethical AI Practices?

Microsoft adheres to Responsible AI principles, focusing on fairness, transparency, accountability, and ethical governance. These guidelines are in close alignment with the NIST AI Risk Management Framework and are ingrained into the platform, providing an extra layer of confidence and assurance.

Figure 4: Mitigation Layers

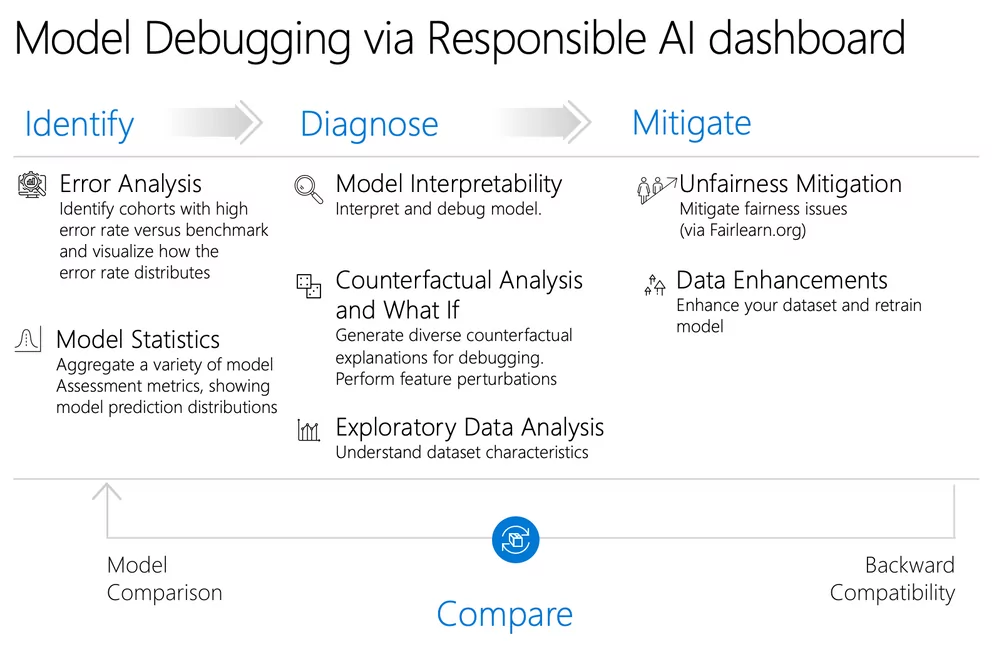

Check out the overview of Responsible AI practices for Azure OpenAI models and how you can assess your own approach using Microsoft’s Responsible AI Maturity Model and the Responsible AI Dashboard tools.

Figure 5: Source

9. How Do Content Filters Enhance Data Security?

Azure OpenAI’s content filters play a crucial role in data security by categorizing risky content, such as violence and hate speech. They aid in legal compliance, ensure user safety, and maintain data integrity. Additionally, these filters are customizable, giving you the freedom to adjust them based on your specific needs and internal policies. In essence, using these filters enhances both your AI deployment and your overall data security.
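As a rough illustration of how an application might act on filter results, the sketch below inspects the per-category annotations that Azure OpenAI attaches to a response (`content_filter_results`, with a `severity` of `safe`, `low`, `medium`, or `high` per category). The sample payload is hand-written for illustration, and the default threshold is an assumption you would tune to your own policy.

```python
# Severity levels in increasing order of risk.
SEVERITY_ORDER = ["safe", "low", "medium", "high"]


def categories_at_or_above(filter_results, threshold="medium"):
    """Return the category names whose severity meets or exceeds the threshold."""
    limit = SEVERITY_ORDER.index(threshold)
    return sorted(
        name
        for name, result in filter_results.items()
        # Entries without a "severity" field are skipped.
        if "severity" in result
        and SEVERITY_ORDER.index(result["severity"]) >= limit
    )


# Hand-written sample shaped like a content_filter_results object.
sample_results = {
    "hate": {"filtered": False, "severity": "safe"},
    "self_harm": {"filtered": False, "severity": "low"},
    "sexual": {"filtered": False, "severity": "safe"},
    "violence": {"filtered": True, "severity": "medium"},
}

print(categories_at_or_above(sample_results))
print(categories_at_or_above(sample_results, threshold="low"))
```

A stricter internal policy can lower the threshold, for example to log every `low` result for later review, without changing the service-side filter configuration.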

10. What Logging Options Are Available in Azure OpenAI?

Azure OpenAI offers extensive security logging, including user activity tracking, API call logs, and data monitoring. It supports incident analysis, complies with major standards, and allows real-time alerts, analytics integration via Azure Monitor and Power BI, and custom log retention policies. Additionally, the service provides a Bring Your Own Data (BYOD) feature for enhanced data control and tailored security configurations.
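To show the kind of analysis these logs enable, here is a small sketch that scans exported API call records and reports callers with an unusual number of failed requests. The field names (`CallerIPAddress`, `ResultSignature`) are assumptions modeled on Azure Monitor diagnostic logs, so verify them against the schema of your own export; the threshold is likewise hypothetical.

```python
from collections import Counter


def callers_over_error_threshold(records, max_errors=3):
    """Return {caller: error_count} for callers with more than max_errors failures."""
    errors = Counter(
        record["CallerIPAddress"]
        for record in records
        # Treat any HTTP status of 400 or above as a failed call.
        if int(record["ResultSignature"]) >= 400
    )
    return {caller: count for caller, count in errors.items() if count > max_errors}


# Hand-written sample records for illustration.
records = (
    [{"CallerIPAddress": "10.0.0.5", "ResultSignature": "429"}] * 4
    + [
        {"CallerIPAddress": "10.0.0.9", "ResultSignature": "200"},
        {"CallerIPAddress": "10.0.0.9", "ResultSignature": "401"},
    ]
)

print(callers_over_error_threshold(records))
```

In practice this logic would more likely live in an Azure Monitor alert rule than in a script, but the same idea applies: define what abnormal looks like, then alert on it.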

11. Logging vs. Bring Your Own Data (BYOD) in Azure OpenAI: What’s the Difference?

You can use Azure OpenAI logging and Bring Your Own Data (BYOD) together. Logging helps track and monitor AI service usage, providing insights into user activity and security. BYOD lets you bring your own data and customize AI models. These features complement each other, enabling detailed logging for compliance and security, as well as the flexibility to use your own data for custom AI applications. Logging offers audit trails, incident response, accountability, and real-time alerts for enhanced compliance and security. BYOD provides data control, customization, privacy, encryption, custom security protocols, and data classification tailored to specific organizational needs.

12. Can You Opt Out of Microsoft’s Logging and Human Review Process?

Yes, if you’re processing sensitive or regulated data and assess the risk of harmful output to be low, Microsoft allows qualified customers to disable abuse monitoring and human review on Azure OpenAI. Upon approval, this ensures your data isn’t stored for additional review, aligning with your compliance requirements. For further details, refer to Microsoft’s Abuse monitoring page.

Tips for a Secure Deployment

Drawing on our experience deploying secure Azure OpenAI solutions, we offer the following recommendations to guide your AI security journey:

- Establish Benchmarks: Begin by aligning your project with established security frameworks and compliance standards at an early stage.

- Classify Data: Implement a systematic approach to categorize data in accordance with governance protocols, ensuring proper handling and protection.

- Develop Policies: Formulate comprehensive AI governance rules and policies that cover the entire lifecycle of your AI solutions.

- Deploy a Secure Baseline: Make full use of Microsoft’s security features, encompassing Identity and Access Management (IDAM), encryption, and network security, to establish a strong security foundation.

- Continuous Monitoring: Maintain ongoing vigilance by conducting regular risk assessments and proactively mitigating potential threats.

- Create a Governance Group: Establish a dedicated governance committee focused on security to oversee and guide your AI initiatives.

- Effective Communication: Foster a culture of security by transparently sharing your security processes and standards throughout your organization.

- Implement Automated Controls: Embrace Infrastructure as Code (IaC) practices to automate and streamline security controls, enhancing efficiency and consistency.

- Integration with Organizational Change: Seamlessly align security considerations with your organizational change procedures, especially in the context of AI implementation and evolution.

By adhering to these best practices, you can significantly enhance the security posture of your Azure OpenAI deployments and ensure a robust and compliant AI ecosystem.
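Several of these recommendations (a secure baseline, automated controls, data residency) can be enforced as a pre-deployment check in an IaC pipeline. The sketch below validates a resource definition against an example baseline. The property names follow the ARM schema for Cognitive Services accounts as we understand it (`publicNetworkAccess`, `disableLocalAuth`, `encryption.keySource`), while the approved regions and the rules themselves are hypothetical policy choices.

```python
# Hypothetical allow-list; replace with the regions your policy approves.
ALLOWED_REGIONS = {"eastus", "eastus2"}


def baseline_violations(resource):
    """Return a list of human-readable baseline violations for a resource definition."""
    props = resource.get("properties", {})
    violations = []
    if resource.get("location") not in ALLOWED_REGIONS:
        violations.append("location is outside the approved regions")
    if props.get("publicNetworkAccess") != "Disabled":
        violations.append("public network access is not disabled")
    if props.get("disableLocalAuth") is not True:
        violations.append("API key (local) authentication is still enabled")
    if props.get("encryption", {}).get("keySource") != "Microsoft.KeyVault":
        violations.append("customer-managed key is not configured")
    return violations


# Hand-written sample definitions for illustration.
compliant = {
    "location": "eastus",
    "properties": {
        "publicNetworkAccess": "Disabled",
        "disableLocalAuth": True,
        "encryption": {"keySource": "Microsoft.KeyVault"},
    },
}
risky = {"location": "westeurope", "properties": {"publicNetworkAccess": "Enabled"}}

for name, definition in [("compliant", compliant), ("risky", risky)]:
    print(name, baseline_violations(definition))
```

Running a check like this in the pipeline, and failing the build when the list is non-empty, keeps the baseline from drifting as templates change. Azure Policy can enforce similar rules on the platform side.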

Summary

Azure OpenAI offers you the best of both worlds: cutting-edge AI technology housed within a secure and compliant framework. This gives you not just a technological edge, but also the peace of mind that comes with the ability to deploy strong security controls.

Interested in learning more? Contact us about our security and AI services, including tailored solutions for securely deploying Azure OpenAI and developing use cases that address your business goals.