AI is changing the game—driving innovation, efficiency, new revenue streams, and more intelligent decision-making. But with all that power comes a significant security risk: data over-sharing.

AI systems process massive amounts of information, and without the proper safeguards, sensitive data can slip through the cracks—leading to security breaches, compliance violations, and unintended exposure of critical information.

So, how do you embrace AI’s full potential without opening the floodgates to data leaks?

The Real Risk: AI Isn’t Creating the Problem—It’s Exposing It Faster

One of the biggest misconceptions about AI security is that AI itself is the risk. In truth, AI is simply exposing gaps that have always existed. The difference is speed and scale.

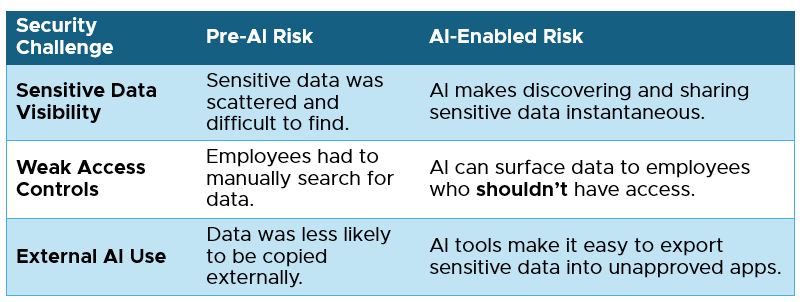

Here’s how AI is accelerating pre-existing security challenges:

Without the right safeguards, these risks can lead to compliance violations, regulatory fines, data privacy issues, and loss of customer trust.

Data Security Starts with Visibility and Control

For most organizations, AI security comes down to two fundamental questions:

- Do you know what data is truly sensitive? Many companies lack a clear inventory of their critical data, making security policies difficult to enforce.

- Can you see how AI is using and sharing that data? Without real-time monitoring, businesses have little control over what AI is surfacing or where sensitive data is going.
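To make the first question concrete, here is a minimal sketch of what a sensitive-data inventory could look like in Python. It is only an illustration of the idea, not how Microsoft Purview or any other product classifies data: the `PATTERNS` table, the label names, and the `classify` and `build_inventory` helpers are all hypothetical, and real classifiers rely on far richer detection than a few regular expressions.

```python
import re

# Hypothetical detection patterns, for illustration only.
# Production classifiers use validation, context, and many more data types.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credit_card": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}


def classify(text: str) -> set[str]:
    """Return the labels of every pattern that appears in the text."""
    return {label for label, pattern in PATTERNS.items() if pattern.search(text)}


def build_inventory(documents: dict[str, str]) -> dict[str, set[str]]:
    """Map each document name to its sensitive-data labels, skipping clean documents."""
    inventory = {}
    for name, text in documents.items():
        labels = classify(text)
        if labels:
            inventory[name] = labels
    return inventory


if __name__ == "__main__":
    sample = {
        "donor_notes.txt": "Contact jane@example.org, SSN 123-45-6789",
        "lunch_menu.txt": "Lunch menu for Friday",
    }
    for name, labels in build_inventory(sample).items():
        print(name, sorted(labels))
```

Even a rough inventory like this gives security policies something to attach to: once a document carries a label, rules about who (or which AI assistant) can surface it become enforceable.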

With AI adoption accelerating, security strategies must evolve just as quickly.

Case Study: Securing a Non-Profit’s Data Before AI Adoption

A non-profit was eager to roll out Microsoft Copilot and build its AI strategy, but first it had to secure its data. The organization quickly identified gaps in visibility, compliance, and protection that could put sensitive information at risk.

AIS stepped in to help. Using Microsoft Purview, we assessed their data security controls, identified vulnerabilities, and provided a clear roadmap for both immediate fixes and long-term AI security enhancements.

The best part? The entire assessment was funded through Microsoft’s Cybersecurity Investment Program, so the non-profit received expert guidance and a tailored security strategy at no cost.

Key Challenges We Found

- Scattered, unprotected sensitive data – Personal information, financial records, and internal documents lacked classification and security controls.

- Ineffective DLP policies – Existing rules weren’t preventing data leaks or unauthorized sharing.

- No insider threat monitoring – Risky user behavior and unauthorized access went undetected.

- No data lifecycle management – Sensitive files were kept indefinitely, increasing security risks.
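The last challenge, files kept indefinitely, is the easiest to picture in code. The sketch below flags files that have outlived a retention period for their label. Everything in it is an assumption made for illustration: the labels, the retention periods in `RETENTION_DAYS`, and the helper names are hypothetical, and actual retention periods depend on the regulations and policies that apply to each organization.

```python
from datetime import date, timedelta

# Hypothetical retention periods in days, keyed by data label.
# Real values come from legal and compliance requirements, not from this sketch.
RETENTION_DAYS = {"financial": 7 * 365, "personal": 3 * 365}
DEFAULT_RETENTION_DAYS = 365


def is_past_retention(label: str, last_modified: date, today: date) -> bool:
    """True when a file is older than the retention period for its label."""
    limit = timedelta(days=RETENTION_DAYS.get(label, DEFAULT_RETENTION_DAYS))
    return today - last_modified > limit


def files_to_review(files: list[tuple[str, str, date]], today: date) -> list[str]:
    """Return the names of files whose retention period has expired."""
    return [
        name
        for name, label, last_modified in files
        if is_past_retention(label, last_modified, today)
    ]


if __name__ == "__main__":
    catalog = [
        ("volunteer_list.xlsx", "personal", date(2020, 1, 1)),
        ("annual_budget.xlsx", "financial", date(2020, 1, 1)),
    ]
    print(files_to_review(catalog, today=date(2024, 1, 1)))
```

The function only flags files for review rather than deleting them, which keeps a human in the loop before anything is removed.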

How AIS Helped

- Classified and protected sensitive data using Microsoft Purview, applying automated security policies.

- Enabled real-time risk monitoring across Microsoft 365 data sources.

- Activated insider threat detection to flag suspicious access and potential data leaks.

The Result: Real-Time Visibility & Control of Their Sensitive Data

With a structured security strategy in place, the non-profit now has real-time visibility into how AI interacts with its sensitive data, along with a clear roadmap for strengthening security as AI adoption expands. With Microsoft Purview as the foundation, the organization can continuously enhance controls to:

- Detect sensitive data exposure before it becomes a breach.

- Automatically adjust access policies based on AI-driven insights.

- Proactively enforce compliance requirements rather than reacting to security incidents after they happen.

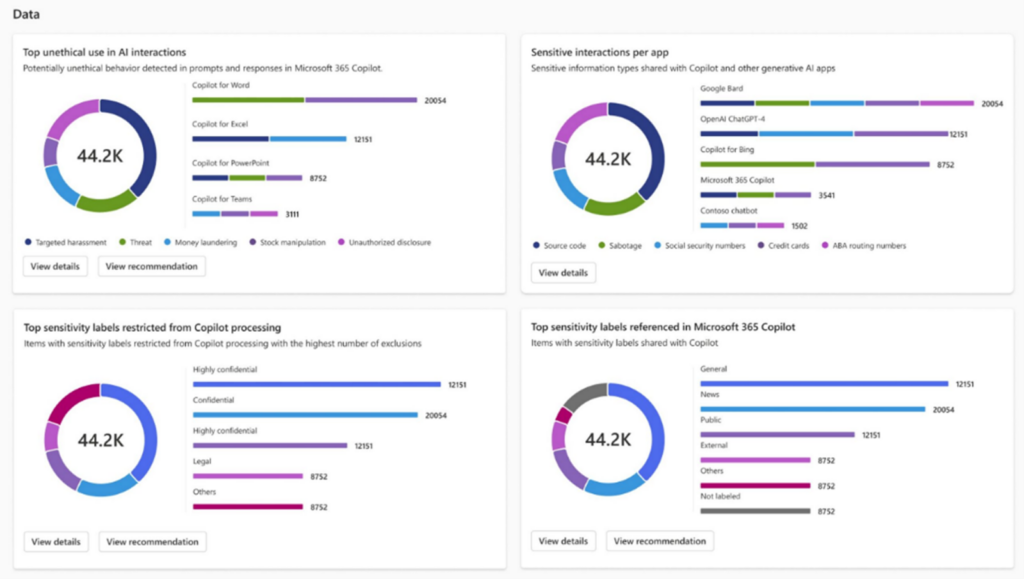

Figure 1 – Microsoft Purview Example: Visibility into Data Interactions with AI

By prioritizing these critical steps, businesses can move beyond reactive AI security measures and instead leverage AI to protect data, enforce policies, and reduce risk—before problems emerge.

Many organizations don’t realize their data is at risk until it’s too late. Don’t wait—take action now. Contact AIS today to start your AI security journey. We’ll put you on the fast path to AI readiness.