Databricks provides a robust notebook environment that is excellent for ad-hoc, interactive access to data. However, it lacks mature software development tooling. Databricks Connect and Visual Studio (VS) Code can help bridge the gap. Once configured, you can use VS Code tooling such as source control, linting, and your other favorite extensions while still harnessing the power of your Databricks Spark clusters.

Configure Databricks Cluster

Your Databricks cluster must be configured to allow connections.

- In the Databricks UI, edit your cluster and add these lines to the Spark config:

spark.databricks.service.server.enabled true

spark.databricks.service.port 8787

- Restart the cluster
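If you want to double-check the cluster settings before moving on, the two lines above can be verified with a few lines of Python. This is a minimal sketch, assuming you have copied the cluster's Spark config text into a string; the helper names `parse_spark_conf` and `missing_connect_settings` and the sample text are hypothetical and not part of any Databricks API.

```python
# Settings this article asks you to add to the cluster's Spark config.
REQUIRED_SETTINGS = {
    "spark.databricks.service.server.enabled": "true",
    "spark.databricks.service.port": "8787",
}


def parse_spark_conf(text):
    """Parse 'key value' lines (one setting per line) into a dict."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        key, _, value = line.partition(" ")
        settings[key] = value.strip()
    return settings


def missing_connect_settings(text):
    """Return required settings that are absent or have a different value."""
    actual = parse_spark_conf(text)
    return {
        key: expected
        for key, expected in REQUIRED_SETTINGS.items()
        if actual.get(key, "").lower() != expected
    }


# Hypothetical config text pasted from the cluster's edit page.
sample = """
spark.databricks.service.server.enabled true
spark.databricks.service.port 8787
"""
print(missing_connect_settings(sample))  # an empty dict means nothing is missing
```

Any key that shows up in the returned dict still needs to be added (or corrected) before you restart the cluster.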

Configure Local Development Environment

The following instructions are for Windows, but the tooling is cross-platform and will work wherever Java, Python, and VS Code run.

- Install Java JDK 8 (enable option to set JAVA_HOME) – https://adoptopenjdk.net/

- Install Miniconda (3.7, default options) – https://docs.conda.io/en/latest/miniconda.html

- From the Miniconda prompt run the following (follow the prompts). Note: the Python and databricks-connect library versions must match the cluster version. Replace each {version-number} with the matching version, e.g. 3.7 for Python and 7.3 for the Databricks Runtime.

``` cmd
conda create --name dbconnect python={version-number}
conda activate dbconnect
pip install -U databricks-connect=={version-number}
databricks-connect configure
```

- Download http://public-repo-1.hortonworks.com/hdp-win-alpha/winutils.exe to C:\Hadoop\bin (Hadoop looks for winutils.exe in the bin folder under HADOOP_HOME)

From an elevated (administrator) command prompt run:

``` cmd
setx HADOOP_HOME "C:\Hadoop" /M
```

- Test Databricks Connect. In the Miniconda prompt run:

``` cmd
databricks-connect test
```

You should see “* All tests passed.” if everything is configured correctly.
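Because a version mismatch is a common reason for the test to fail, it can help to make the version rule explicit. The following is a minimal sketch, not part of Databricks Connect itself: `connect_requirement` and `python_matches` are hypothetical helper names, and the wildcard pin (`==7.3.*`) is an assumption about how you may want to pin the client to a runtime's major.minor version, whereas the command above uses an exact version.

```python
import sys


def connect_requirement(runtime_version):
    """Build a pip requirement pinned to a Databricks Runtime major.minor."""
    major, minor = runtime_version.split(".")[:2]
    return f"databricks-connect=={major}.{minor}.*"


def python_matches(expected, actual=None):
    """True if the interpreter's major.minor equals `expected` (e.g. '3.7')."""
    if actual is None:
        actual = sys.version_info
    return f"{actual[0]}.{actual[1]}" == expected


# Example values from this article; substitute your own cluster's versions.
print(connect_requirement("7.3"))
print(python_matches("3.7"))
```

Run it inside the activated `dbconnect` environment: if `python_matches` prints `False`, recreate the environment with the Python version your cluster uses before retrying `databricks-connect test`.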

- Install VS Code and the Python extension

- https://code.visualstudio.com/docs/python/python-tutorial

- Open a Python file and select the “dbconnect” interpreter in the lower toolbar of VS Code

- Activate the conda environment in a VS Code cmd terminal

From the VS Code Command Prompt terminal:

This only needs to be run once (replace {username} with your username):

``` cmd
C:\Users\{username}\Miniconda3\Scripts\conda init cmd.exe
```

Open a new Cmd terminal:

``` cmd
conda activate dbconnect
```

Optional: You can rerun the `databricks-connect test` command from the earlier test step to ensure the Databricks Connect library is configured and working within VS Code.
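Before running the full test, a quick local check can catch missing environment variables. The sketch below is illustrative only: `check_environment` is a hypothetical helper, and it assumes winutils.exe sits in a `bin` folder under HADOOP_HOME and that your conda environment is named `dbconnect` as in this article.

```python
import os


def check_environment(env=None):
    """Return a list of human-readable problems; an empty list means OK."""
    env = os.environ if env is None else env
    problems = []

    java_home = env.get("JAVA_HOME")
    if not java_home or not os.path.isdir(java_home):
        problems.append("JAVA_HOME is not set or does not point to a directory")

    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        problems.append("HADOOP_HOME is not set")
    else:
        # Assumption: winutils.exe lives in a 'bin' folder under HADOOP_HOME.
        winutils = os.path.join(hadoop_home, "bin", "winutils.exe")
        if not os.path.isfile(winutils):
            problems.append(f"winutils.exe not found at {winutils}")

    # conda sets CONDA_DEFAULT_ENV to the name of the active environment.
    if env.get("CONDA_DEFAULT_ENV") != "dbconnect":
        problems.append("the 'dbconnect' conda environment is not active")

    return problems


for problem in check_environment():
    print("WARNING:", problem)
```

No output means all three checks passed. Variables set with `setx` only become visible in terminals opened afterwards, so open a new VS Code terminal before running it.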

Run Spark commands on Databricks cluster

You now have VS Code configured with Databricks Connect running in a Python conda environment. Use the code below to get a Spark session; any Spark code that depends on it will be submitted to the Databricks cluster for execution.

``` python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()
```

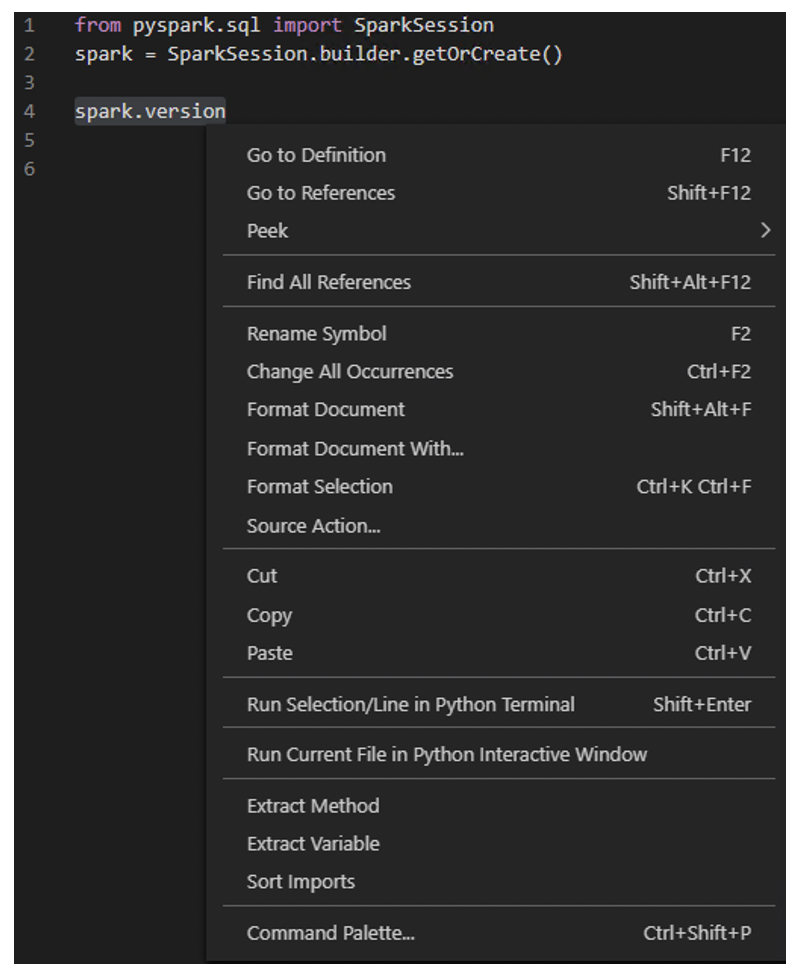

Once a session is established, you can interactively send commands to the cluster by selecting them and choosing “Run Selection/Line in Python Interactive Window” from the right-click menu, or by pressing Shift+Enter.

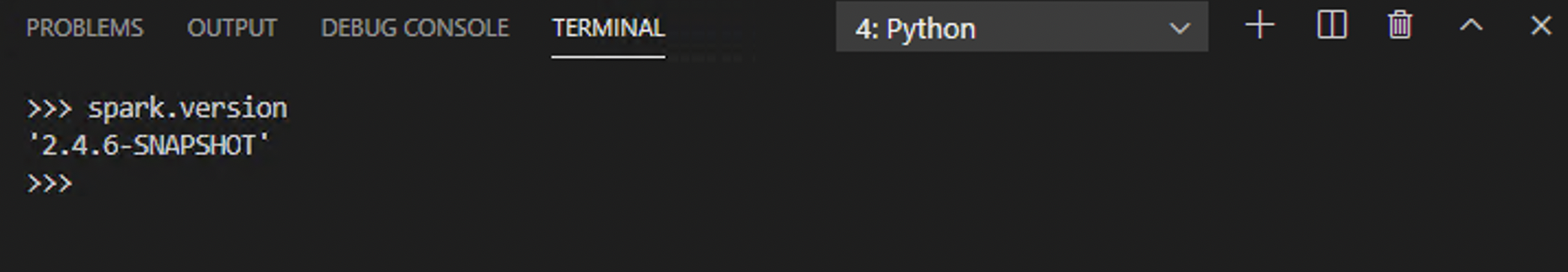

The results of the command executed on the cluster will display in the Visual Studio Code Terminal. Commands can also be executed from the command line window.
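As a concrete example of a command to send, the sketch below wraps a small Spark job in a function so it can be reused from the interactive window or a script. It is an illustration under stated assumptions: `get_spark` and `count_even_ids` are hypothetical helper names, and the job itself (counting even ids) is only a placeholder workload.

```python
def get_spark():
    """Return the Spark session; with Databricks Connect it targets the cluster."""
    # Imported lazily so this module can be loaded without pyspark installed.
    from pyspark.sql import SparkSession
    return SparkSession.builder.getOrCreate()


def count_even_ids(spark, n):
    """Count the even ids in [0, n); the filter and count run on the cluster."""
    return spark.range(n).filter("id % 2 = 0").count()


# In the Python Interactive Window, run for example:
#   spark = get_spark()
#   print(count_even_ids(spark, 100))
```

Only the final count travels back to your machine; the range, filter, and aggregation are executed by the cluster.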

Summary

To recap, we set up a Python virtual environment with Miniconda and installed the dependencies required to run Databricks Connect. We configured Databricks Connect to talk to our hosted Azure Databricks cluster and set up Visual Studio Code to use the conda command prompt to execute code remotely. Now that you can develop locally in VS Code, you can use its developer tooling to build a more robust, developer-centric solution.