The first AWS service (S3) was launched in March 2006. My humble journey in the cloud started in 2007, leading up to my first cloud talk in New York in 2008. In this blog post, I want to talk about what I, and we collectively, have learned from the last decade in the cloud and how to use this knowledge to drive cloud value. Let us get started.

By now, the transformational benefits of the cloud are well established. Look no further than spending on the top three cloud providers, which was nearly 150 billion dollars in 2021. However, current cloud spending is only a fraction of global IT spend, which stands at 2-3 trillion dollars. This suggests that the main chapters of the cloud story are yet to be written. As is the case with the progression of any successful technology, the cloud is expected to become so pervasive that it will become invisible – just as traditional IaaS is becoming largely invisible with the advent of Kubernetes and serverless models.

Future discussions about the cloud will be less about cloud capabilities and more about the outcomes empowered by cloud services. At some point, we can expect to stop talking about enterprise landing zones, VNETs/VPCs, and PaaS v-next, i.e., containers and serverless. I also hope that we will stop measuring our progress in the cloud using “proxy” metrics like the number of VMs migrated, number of cloud deployments, number of workloads moved to the cloud, cloud policy violations, and more. Later in this blog, we will talk about the cloud transformation metrics that matter most at the end of the day.

But first, let us get back to the theme of this blog post – driving cloud value. This depends on a few things:

- Mature cloud foundation

- Cloud value stream

Mature Cloud Foundation

With cloud providers releasing close to three thousand features each year, cloud entropy is absolute. Cloud consumers, including big enterprises, don’t have the resources to take advantage of all the new features announced daily. As a result, cloud consumers need to focus on improving their foundational capabilities. Let us look at a few (non-exhaustive) foundational capabilities.

- Consistency – Pick a few (3-5) architectural blueprints that will support 80% of the application types. Ensure that users can provision these architectural blueprints consistently, quickly, and in a self-service manner using a service catalog, like Zeuscale. The service catalog, in turn, is powered by a collection of robust automation scripts that address cross-cutting objectives. Here are a few attributes to consider:

  - Resilience – spreading the subsystems across availability zones

  - Observability – automatically enabling monitoring machinery

  - Security – a zero-trust approach that assumes breach

  It is crucial that you treat the above-mentioned automation assets as first-class code artifacts and invest in their continual improvement.

- Cost Optimization – Too many organizations are witnessing unplanned increases in their cloud bills. Worse, it has been suggested that 30% of all cloud consumption can be attributed to waste. Cloud cost maturity comes with cloud maturity. Ensure that you have enough governance in place, including resource tags, provisioning policies, reservations for long-term resource use, monitoring and alerts, and detailed usage reporting at a level of granularity that matches your organizational hierarchy. Doing so will allow you to catch unplanned cloud expenditure and improve your ability to predict costs over time.

- Security – Resources provisioned in the cloud are dynamic, and so is security monitoring. Security monitoring does *not* end with provisioning a compliant architectural blueprint. In fact, it starts with the provisioning step. You will need automation jobs that continuously monitor your applications’ security configurations and security policies to prevent “drift” from a compliant state.

- Data Gravity – In 2010, Dave McCrory proposed the concept of “data gravity,” where applications, services, and business logic move physically closer to where data is stored. As you can imagine, data gravity is a critical consideration for companies with a multi-cloud setup, i.e., it is hard to spread applications across clouds because of data gravity. One way to dent the data gravity challenge is to have a data-sharing strategy in place. Data sharing can be based on copying datasets or accessing them in place, and can span single or multiple clouds. Almost 80% of Fortune 500 companies find themselves in a multi-cloud setup. These companies are provisioning similar technology stacks across more than one cloud provider.

- Center of Excellence – We talked about settling on a small set of architecture blueprints. You will need to invest in a forward-looking CoE group that continues to track the advances in the cloud and ensures that your organization is not caught flat-footed in the face of a disruptive new cloud capability or an architecturally significant improvement to an existing service. Without a CoE team focused on tracking and evaluating new capabilities in the cloud, you are likely to accrue cloud debt rapidly.

- Inclusiveness – Cloud is not just for professional developers and infrastructure engineers. An inclusive cloud strategy needs to support the needs of a growing community of citizen developers as well. Constructs like the self-service provisioning and architectural blueprints that we discussed earlier need to be accessible to citizen developers. For example, it should be seamless for citizen developers to mix and match low-code/no-code constructs with advanced cloud platform constructs.

- Data Analytics – As you plan to migrate or reimagine your applications in the cloud, recognize the immense potential of the data being collected. By planning for data ingestion and data transformation upfront, you can help bridge the divide between operations and analytics data. Architectures like the data mesh, which treat data (operations and analytics) as the product, are headed in this direction.

- Cloud Operating Model – Your traditional infrastructure, networking, and security teams must embrace a cloud operating model. They must rely on modern development practices: iterative development, DevSecOps, and maintaining infrastructure, network, and security as code. You cannot succeed in the cloud with a traditional IT operating mindset.

- Continuous Learning – Your organization may have become fluent in the cloud basics, but you will need continuous learning and upskilling programs to reach the next level. Only an organization that embeds a culture of learning can truly achieve its cloud transformation goals.

- Sandbox – Along with upskilling programs, cloud teams need the freedom to experiment and fail. This is where a cloud sandbox unencumbered by enterprise cloud security policies is essential for innovation. It should be possible for teams to experiment with any new, fast-arriving preview capabilities within hours (not weeks or months).
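To make the idea of configuration “drift” from the Security item above more concrete, here is a minimal sketch of a drift check. It assumes a hypothetical baseline of settings for one blueprint; the setting names and the `find_drift` helper are illustrative and do not correspond to any particular cloud provider's API. A real automation job would fetch the observed configuration from the provider and run a check like this on a schedule.

```python
from typing import Any

# Hypothetical compliant baseline for one architectural blueprint.
# The keys are illustrative, not real provider setting names.
BASELINE: dict[str, Any] = {
    "encryption_at_rest": True,
    "public_network_access": False,
    "min_tls_version": "1.2",
}


def find_drift(
    baseline: dict[str, Any], actual: dict[str, Any]
) -> dict[str, tuple[Any, Any]]:
    """Return {setting: (expected, observed)} for every deviating setting.

    A setting that is missing from the observed configuration is reported
    with an observed value of None.
    """
    missing = object()  # sentinel to tell "absent" apart from any real value
    drift: dict[str, tuple[Any, Any]] = {}
    for key, expected in baseline.items():
        observed = actual.get(key, missing)
        if observed != expected:
            drift[key] = (expected, None if observed is missing else observed)
    return drift


# Example: a resource whose network setting was changed after provisioning
# and whose TLS setting is no longer reported at all.
observed_config = {"encryption_at_rest": True, "public_network_access": True}

for setting, (expected, observed) in find_drift(BASELINE, observed_config).items():
    print(f"{setting}: expected {expected!r}, found {observed!r}")
```

The point of the sketch is that compliance is evaluated against the baseline repeatedly, not just once at provisioning time; any non-empty result would trigger an alert or an automated remediation.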

Focus on Cloud Value Stream

Working on the cloud foundation alone will not be enough to leverage all the benefits cloud has to offer. You will need to consider the entire cloud value stream – a set of actions from start to finish that bring the value of the cloud to an organization. Cloud value streams allow businesses to specify the value proposition that they want to realize from the cloud.

Align cloud strategy with business objectives

The key idea is to start with a business strategy that can help realize the value proposition, then map that strategy into a list of cloud services. The list of cloud services, in turn, determines your cloud adoption plan. Let us break this down.

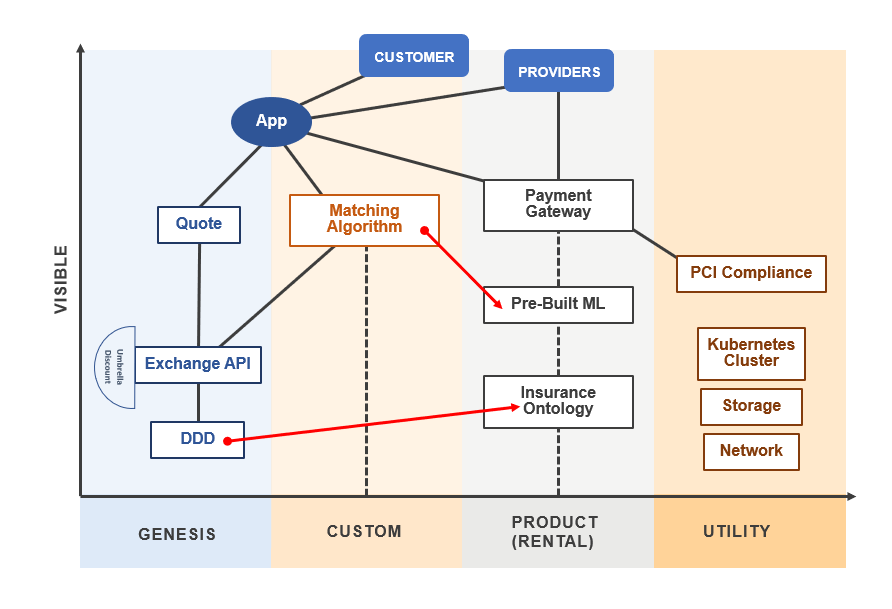

One of my favorite tools to develop a business strategy is a Wardley map. Wikipedia describes a Wardley map as a set of components positioned within a value chain (vertical axis) and anchored by the user need, with movement defined by an evolution axis (horizontal axis). Don’t worry if you are feeling a bit lost with the definition. A simple example can help. Let us assume that the business leaders of a fictitious financial services company want to set up an insurance exchange in the future.

Starting from the perspective of the user of the insurance exchange, you can create a Wardley map, as shown in the diagram below.

Mapped along the value chain (vertical axis) are the value-line capabilities of the insurance exchange. These capabilities are pegged on an evolution axis (horizontal axis) that represents the evolution of the components from genesis (high value) to utility (commodity).

A map like this allows you to organize your cloud investments. For example, the Matching Algorithm that pairs incoming purchase requests with the insurance providers may need to be a custom-built capability. A custom-built capability requires additional investment, but it also offers a differentiator and potentially higher profit. As it evolves, this matching capability may become available as a pre-built ML product or a rental capability. So, there is indeed a risk of commoditization. But the question is – how soon can that happen? Wardley maps excel at bringing the discussions of various teams together into a single map.
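As a rough illustration of how such a map can inform investment decisions, the sketch below models components with a position on the value chain and an evolution stage, then suggests a sourcing approach for each. Only the Matching Algorithm comes from the example above; the other components, the visibility scores, and the build/buy/consume rule are assumptions made for illustration, not part of Wardley mapping itself.

```python
from dataclasses import dataclass

# The four evolution stages of a Wardley map, ordered left to right.
STAGES = ["genesis", "custom-built", "product", "commodity"]


@dataclass
class Component:
    name: str
    visibility: float  # value chain position: 1.0 = closest to the user
    stage: str         # one of STAGES


def investment_approach(component: Component) -> str:
    """Suggest a rough sourcing approach from the evolution stage."""
    position = STAGES.index(component.stage)
    if position <= 1:
        return "build"    # differentiating: invest in custom development
    if position == 2:
        return "buy"      # available as a product or rental
    return "consume"      # commodity: use a utility cloud service


# Only "Matching Algorithm" comes from the text; the rest are made up.
exchange_map = [
    Component("Matching Algorithm", visibility=0.6, stage="custom-built"),
    Component("Customer Portal", visibility=0.9, stage="product"),
    Component("Compute", visibility=0.1, stage="commodity"),
]

# Print components from the top of the value chain to the bottom.
for c in sorted(exchange_map, key=lambda c: c.visibility, reverse=True):
    print(f"{c.name:20} {c.stage:13} -> {investment_approach(c)}")
```

Revisiting the `stage` of each component over time is one way to keep the commoditization question visible: when the Matching Algorithm moves to "product", the suggested approach changes from build to buy.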

The End-to-End Flow of Business Value

Earlier in this post, we talked about “proxy” metrics such as the number of VMs or workloads migrated to the cloud. While these metrics are helpful as IT and agile metrics, they fail to communicate the overall progress of a cloud transformation effort from the perspective of business outcomes. This is where Flow Framework®, introduced by Dr. Mik Kersten, comes in.

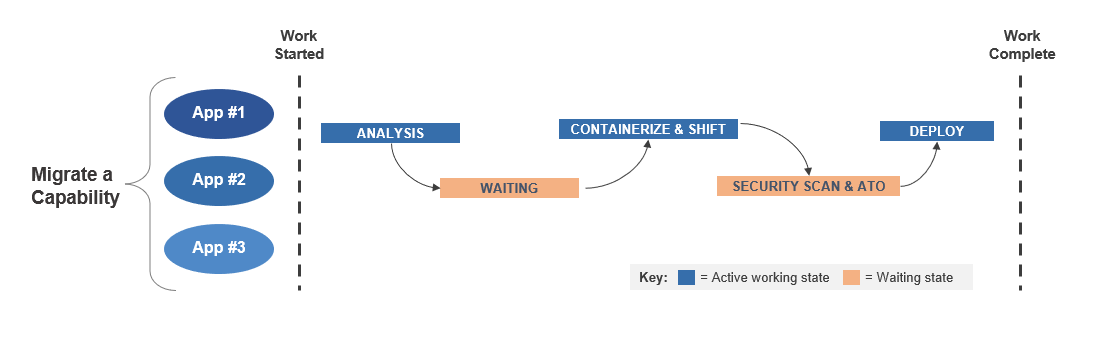

The core premise of the Flow Framework is the need to measure the end-to-end flow of business value and the results it produces. Flow Metrics measure the flow of business value through all the activities of a software value stream. For example, consider the following chart depicting the Flow Efficiency metric. Flow Efficiency is the ratio of active time to total Flow Time.

A few things to note in the diagram below:

- We are measuring the end-to-end migration time of the application.

- Additionally, we are considering the entire capability area and not an individual app.

- The process of containerizing the app seems to be quick, but we are spending a significant time on security scanning and Authority to Operate (ATO) certification – not surprising for a highly regulated environment with very stringent security expectations.

Perhaps we need to make an upfront down payment on “technical debt” associated with security scanning and certification. Doing so would improve the flow efficiency of cloud migration.
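To show how the Flow Efficiency ratio works out in practice, here is a small sketch using the three migration stages mentioned above. The day counts are invented for illustration and are not taken from the chart; the `Stage` type and `flow_efficiency` function are likewise hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    active_days: float  # time someone is actually working on the item
    wait_days: float    # time the item sits idle in a queue


def flow_efficiency(stages: list[Stage]) -> float:
    """Active time divided by total flow time (active + waiting)."""
    active = sum(s.active_days for s in stages)
    total = sum(s.active_days + s.wait_days for s in stages)
    if total == 0:
        raise ValueError("total flow time must be positive")
    return active / total


# Illustrative numbers only: 10 active days out of 40 total days.
migration = [
    Stage("Containerize app", active_days=3, wait_days=1),
    Stage("Security scanning", active_days=2, wait_days=10),
    Stage("ATO certification", active_days=5, wait_days=19),
]

efficiency = flow_efficiency(migration)
slowest = max(migration, key=lambda s: s.wait_days)

print(f"Flow efficiency: {efficiency:.0%}")
print(f"Longest wait: {slowest.name} ({slowest.wait_days} days)")
```

With these made-up numbers, most of the elapsed time is waiting in the security and certification stages, which is why investing there improves the ratio far more than speeding up containerization would.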

In summary, to drive cloud value, you need a robust cloud foundation, as well as a keen eye on the overall cloud value stream. Focusing on a few well-defined architectural blueprints will give you the opportunity to mature in the areas of cloud costs, automation, and readiness. Focusing on the overall cloud value stream will ensure that your cloud investments are aligned with your strategic business goals.

Contact AIS to talk cloud strategy and business objectives.