With the rise in popularity and widespread adoption of containers, Azure offers a suite of services designed to cater to diverse containerized workloads. Containers have revolutionized the way applications are developed, deployed, and managed, providing portability, efficiency, and scalability. Among Azure’s container offerings are Azure Container Instances (ACI), Azure Container Apps (ACA), and Azure Kubernetes Service (AKS), each bringing unique capabilities for organizations to take advantage of. In this article, we will review these three container solutions, providing an overview of their features and highlighting the key differences and use cases for each. Whether you are seeking simplicity, a serverless container experience, or robust orchestration, understanding these services will help you make informed decisions for your containerized applications.

Azure Container Instances: On-demand Containerized Workloads

Azure Container Instances (ACI) is, in my opinion, the simplest of the Azure container offerings. ACI is a serverless platform that lets you quickly deploy containers to the cloud without provisioning or managing virtual machines. That makes it a strong fit for scenarios such as developers and testers who need an on-demand container, or batch processing jobs that require minimal infrastructure. ACI is billed per second, so it is an economical option for containers that do not need to run continuously.
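To make the per-second model concrete, here is a minimal Python sketch that compares a short on-demand run with the same container left running for a 30-day month. The rates `VCPU_PER_SECOND` and `GIB_PER_SECOND` are hypothetical placeholders, not real Azure prices; check the Azure pricing page for the current rates in your region.

```python
# Hypothetical placeholder rates (USD), NOT real Azure prices.
VCPU_PER_SECOND = 0.0000125  # assumed cost of one vCPU for one second
GIB_PER_SECOND = 0.0000014   # assumed cost of one GiB of memory for one second


def aci_cost(duration_s: int, vcpus: float, memory_gib: float) -> float:
    """Estimate the cost of a container group billed per second of runtime."""
    per_second = vcpus * VCPU_PER_SECOND + memory_gib * GIB_PER_SECOND
    return duration_s * per_second


# A 20-minute regression run versus the same container left on for 30 days.
on_demand = aci_cost(20 * 60, vcpus=1, memory_gib=1.5)
always_on = aci_cost(30 * 24 * 3600, vcpus=1, memory_gib=1.5)
print(f"on demand: ${on_demand:.4f}  always on: ${always_on:.2f}")
```

Whatever the real rates are, the always-on figure is 2,160 times the on-demand one, because a 30-day month contains 2,160 twenty-minute intervals.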

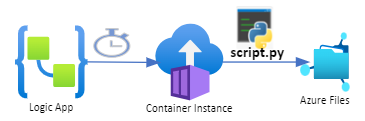

For example, consider a developer who wants to quickly deploy, and later destroy, a standalone container once per release cycle as part of a regression testing process. By integrating a CI/CD pipeline with Azure, the developer can push a container to ACI, run the regression tests, and delete the container without incurring significant costs. Batch processing is another good example: ACI integrates with common Azure components such as Key Vault, Storage, and Virtual Networks, so a container can securely connect to a storage account and process files. Azure Functions or Logic Apps can be used to trigger the creation and deletion of the container group.
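The regression-testing workflow above can be sketched as a create, read logs, delete sequence of Azure CLI calls. This Python sketch only builds the `az container create`, `az container logs`, and `az container delete` commands and, by default, prints them instead of running them. The resource group, container name, image, and CPU/memory sizes are hypothetical examples.

```python
import subprocess
from typing import List


def aci_test_cycle(resource_group: str, name: str, image: str) -> List[List[str]]:
    """Build Azure CLI commands for a create -> read logs -> delete cycle."""
    return [
        # Start the test container once; "Never" means it is not restarted on exit.
        ["az", "container", "create",
         "--resource-group", resource_group, "--name", name,
         "--image", image, "--restart-policy", "Never",
         "--cpu", "1", "--memory", "1.5"],
        # Read the container's stdout/stderr, e.g. the test runner's report.
        ["az", "container", "logs",
         "--resource-group", resource_group, "--name", name],
        # Remove the container group; --yes skips the confirmation prompt.
        ["az", "container", "delete",
         "--resource-group", resource_group, "--name", name, "--yes"],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them in order and stop on the first failure."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)


# Hypothetical resource group, container name, and image.
steps = aci_test_cycle("rg-ci", "regression-run", "myregistry.azurecr.io/app-tests:1.0")
run(steps)  # dry run: prints the three commands
```

The sketch leaves out authentication (for example `az login` or a service principal in the pipeline) and does not wait for the tests to finish; a real pipeline would poll the container group's state, for instance with `az container show`, before reading logs and deleting it.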

Example: Logic App executing an ACI to process files on a file share.

While ACI offers simplicity and speed, the service has limitations. The lack of automatic scaling and of full container orchestration are the two biggest reasons organizations avoid ACI for workloads with complex infrastructure requirements. For more information on ACI, see the Microsoft documentation.

Azure Container Apps: Scalable Serverless Containerization

Azure Container Apps (ACA) is a serverless container platform on Azure that simplifies the deployment and management of containerized applications. Built on Kubernetes, ACA provides many of the benefits of Kubernetes without the complexity of managing the underlying infrastructure, allowing developers to focus on building and deploying scalable applications. The following are some examples of workloads that benefit from Azure Container Apps:

- Web Applications – ACA supports web applications built with a variety of frameworks and languages, such as ASP.NET, Node.js, Python (Django), and Ruby on Rails. It can scale web applications automatically based on traffic, helping maintain availability and performance during peak usage.

- APIs and Microservices – ACA integrates with other Azure services, such as Azure Functions, Azure Storage, and Azure Cosmos DB, for building scalable and resilient APIs. Its Kubernetes foundation simplifies deploying and managing microservices, with built-in features such as service discovery and load balancing.

- Event-Driven Applications – ACA integrates with Azure Event Grid to build event-driven applications that scale automatically in response to incoming events. Its serverless nature reduces management overhead, allowing developers to focus on writing event handlers and business logic.
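The automatic scaling described for web applications is configured per container app as scale rules. The Python sketch below builds a dict shaped like the `scale` section of a Container Apps template (`minReplicas`, `maxReplicas`, and an HTTP rule keyed on `concurrentRequests`) and approximates the resulting replica count. The rule name and numbers are illustrative assumptions, and the calculation is a simplification: the real scaler, which is built on KEDA, also applies polling intervals and cool-down periods.

```python
import math


def http_scale_section(min_replicas: int = 0, max_replicas: int = 10,
                       concurrent_requests: int = 100) -> dict:
    """Build a dict shaped like the `scale` section of a Container Apps template."""
    return {
        "minReplicas": min_replicas,
        "maxReplicas": max_replicas,
        "rules": [{
            "name": "http-rule",  # hypothetical rule name
            "http": {"metadata": {"concurrentRequests": str(concurrent_requests)}},
        }],
    }


def approx_replicas(scale: dict, in_flight_requests: int) -> int:
    """Rough replica count: enough replicas to keep each at or below the target."""
    target = int(scale["rules"][0]["http"]["metadata"]["concurrentRequests"])
    wanted = math.ceil(in_flight_requests / target)
    # Clamp to the configured bounds; minReplicas=0 allows scaling to zero.
    return max(scale["minReplicas"], min(scale["maxReplicas"], wanted))


scale = http_scale_section()
for load in (0, 250, 5000):
    print(load, "requests ->", approx_replicas(scale, load), "replicas")
```

With these settings the sketch yields 0, 3, and 10 replicas respectively; the last value is capped by `maxReplicas`.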

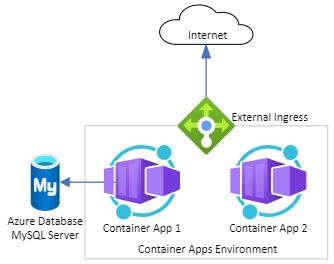

Example: Web applications running on ACA.

Like ACI, Azure Container Apps is billed per second for the vCPU and memory resources consumed. Because ACA autoscales, you pay more only during periods of high demand and save during periods of low demand, when the application scales in (down to zero replicas, if configured).
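The cost effect of autoscaling can be approximated by summing replica-seconds. In this Python sketch the traffic timeline and the per-replica sizes (0.5 vCPU, 1 GiB) are hypothetical, and the output is billable quantities rather than prices; actual Container Apps consumption pricing has further details, such as per-request charges and reduced rates for idle replicas, that the sketch ignores.

```python
from typing import List, Tuple

HOUR = 3600  # seconds


def billed_resources(timeline: List[Tuple[int, int]], vcpu_per_replica: float,
                     gib_per_replica: float) -> Tuple[float, float]:
    """Return (vCPU-seconds, GiB-seconds) for (duration_s, replica_count) periods."""
    replica_seconds = sum(duration * replicas for duration, replicas in timeline)
    return replica_seconds * vcpu_per_replica, replica_seconds * gib_per_replica


# Hypothetical day: idle overnight (scaled to zero), moderate by day, short peak.
autoscaled = [(8 * HOUR, 0), (12 * HOUR, 2), (4 * HOUR, 6)]
# The same app pinned at its peak replica count all day.
fixed_at_peak = [(24 * HOUR, 6)]

print(billed_resources(autoscaled, vcpu_per_replica=0.5, gib_per_replica=1.0))
print(billed_resources(fixed_at_peak, vcpu_per_replica=0.5, gib_per_replica=1.0))
```

In this example the autoscaled day consumes one third of the vCPU and memory of the deployment pinned at its peak.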

While ACA offers a simple, scalable way to deploy containerized applications, some use cases require more resiliency and more control over the underlying infrastructure. In these cases, consider other solutions, such as Azure Kubernetes Service (AKS). For more information on ACA, see the Microsoft documentation.

Azure Kubernetes Service: Full Container Orchestration

Many organizations have complex workloads that demand robust capabilities. Production services often require a level of high availability, resilience, and scalability that ACI and ACA cannot fully provide. These workloads benefit from a fully orchestrated container platform such as Azure Kubernetes Service (AKS). In the context of containers, orchestration is the automated management of containerized workloads across multiple nodes. This includes pod scheduling and rescheduling, service creation and discovery, storage orchestration for stateful workloads, and pod and node autoscaling. Two areas where AKS is especially well suited to enterprise workloads are high availability and advanced service discovery, which we review in more detail below.

High Availability – A main benefit of AKS is workload resiliency at multiple levels, which keeps critical production applications online. While ACI and ACA include some of these features, AKS offers all of them, with more control over how they are configured.

- Node pools across availability zones – Nodes in AKS are individual virtual machines (VMs) that run containerized applications. Nodes are grouped into node pools, each backed by a virtual machine scale set. A node pool can be spread across multiple availability zones (physically separate datacenters) within an Azure region, protecting workloads from localized hardware or datacenter failures.

- Cluster self-healing – AKS clusters automatically manage containerized workloads to keep them online. The scheduler places each pod (a group of one or more containers) on a node with enough resources, and containers that fail are restarted automatically. If a pod is evicted because of an underlying node issue, its controller (for example, a Deployment) recreates it and the scheduler places it on a healthy node.

- Horizontal node autoscaling – AKS clusters are composed of node pools, which are collections of nodes (VMs) running Kubernetes. With the cluster autoscaler enabled, a node pool adds nodes when pods cannot be scheduled for lack of resources and removes underutilized nodes, so capacity tracks the workloads assigned to it.

- Horizontal pod autoscaling – Organizations running AKS can use the native Kubernetes Horizontal Pod Autoscaler (HPA) to automatically adjust the number of pod replicas in a Deployment or ReplicaSet based on observed metrics, such as CPU or memory utilization, balancing performance and resource efficiency.

- Taints, Tolerations, and Node Selectors – AKS lets you apply taints to nodes and tolerations to pods: a tainted node repels any pod without a matching toleration, so only tolerating pods can be scheduled there. Node selectors complement this by restricting pods to nodes with specific labels. Together, these controls determine which workloads run on which nodes, for example keeping critical workloads on a dedicated node pool, which limits the impact of failures or resource contention elsewhere in the cluster.
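The Horizontal Pod Autoscaler's core calculation is documented by Kubernetes as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with a default tolerance of 0.1 within which no scaling happens. The Python function below is a minimal sketch of that rule only; the real controller also handles missing metrics, pods that are not yet ready, and stabilization windows, and the `min_replicas`/`max_replicas` defaults here are assumptions.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA rule: ceil(current * current_metric / target), clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: the HPA skips scaling.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% memory utilization against a 70% target.
print(hpa_desired_replicas(4, current_metric=90, target_metric=70))
```

This prints 6: 4 × 90 / 70 ≈ 5.14, rounded up.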

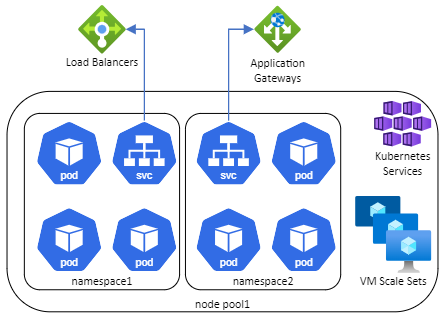
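The placement rules in the taints, tolerations, and node selectors bullet can be modeled as a filter. The following Python sketch is a simplified model of how Kubernetes matches tolerations to taints and node labels to a `nodeSelector`; it is not the scheduler's actual code, and the `agentpool` label value and `sku=gpu` taint are hypothetical examples. The real scheduler also checks resource requests, affinity rules, and more.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass
class Toleration:
    key: str = ""
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """True if a single toleration matches a single taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        # An empty key with "Exists" tolerates every taint.
        return tol.key == "" or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value


def can_schedule(node_labels: Dict[str, str], node_taints: List[Taint],
                 node_selector: Dict[str, str], tolerations: List[Toleration]) -> bool:
    # nodeSelector: every requested label must be present with the same value.
    if any(node_labels.get(k) != v for k, v in node_selector.items()):
        return False
    # Hard taints must each be tolerated; PreferNoSchedule is only a soft preference.
    for taint in node_taints:
        if taint.effect == "PreferNoSchedule":
            continue
        if not any(tolerates(t, taint) for t in tolerations):
            return False
    return True


# Hypothetical dedicated GPU node pool.
gpu_labels = {"agentpool": "gpupool"}
gpu_taints = [Taint("sku", "gpu", "NoSchedule")]
gpu_pod_tolerations = [Toleration(key="sku", operator="Equal", value="gpu", effect="NoSchedule")]

print(can_schedule(gpu_labels, gpu_taints, {"agentpool": "gpupool"}, gpu_pod_tolerations))
print(can_schedule(gpu_labels, gpu_taints, {}, []))
```

The first call prints `True` (the pod selects the pool and tolerates its taint); the second prints `False`, because an ordinary pod without the toleration is repelled from the GPU nodes.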

Advanced Service Discovery – In Azure Kubernetes Service (AKS), service discovery is a fundamental feature that enables communication between services and containerized workloads within the Kubernetes cluster. Each service deployed in AKS is assigned a DNS name, so other workloads can discover and reach it by name. AKS supports namespaces, which separate services from one another while still allowing inter-service communication within the cluster. This is a significant advantage over Azure Container Apps (ACA), where applications must reside in the same environment to communicate, limiting flexibility in service isolation. AKS also integrates with Azure’s native load-balancing services, including Azure Load Balancer and Application Gateway, to expose services externally in a flexible and scalable way.

Example: Namespace isolation, communication, and service exposure in AKS.
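Kubernetes gives each Service a DNS name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain defaults to `cluster.local`. The Python sketch below builds that name and lists the candidates a pod tries when it looks up a short name through its DNS search list. The `orders`, `billing`, and `frontend` names are hypothetical, and the model is simplified: a pod's `resolv.conf` may contain additional search domains, and its `ndots` option decides whether the search list is used at all.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name Kubernetes assigns to a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def lookup_candidates(name: str, pod_namespace: str,
                      cluster_domain: str = "cluster.local") -> list:
    """Names tried, in order, when a pod resolves a short name via its search list."""
    search = [f"{pod_namespace}.svc.{cluster_domain}",
              f"svc.{cluster_domain}",
              cluster_domain]
    return [f"{name}.{suffix}" for suffix in search]


# Hypothetical "orders" service in a "billing" namespace.
print(service_fqdn("orders", "billing"))
# From a pod in "billing", the bare name "orders" matches on the first try.
print(lookup_candidates("orders", "billing")[0])
# From a pod in "frontend", "orders.billing" matches on the second candidate.
print(lookup_candidates("orders.billing", "frontend")[1])
```

All three lines print `orders.billing.svc.cluster.local`. DNS resolution by itself does not block cross-namespace traffic; restricting which namespaces may talk to each other is typically done with Kubernetes NetworkPolicy.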

While AKS offers robust orchestration and fine-grained control over infrastructure, this control comes with added management overhead and requires expertise that operations teams must be prepared to provide. AKS also uses a different billing model from ACA and ACI: instead of paying per second for container resources, you pay for the VMs that make up the cluster's nodes and their associated disks. Node costs are priced hourly based on the VM SKU size and the number of nodes in the cluster, with additional charges for the disk storage the nodes use. For more information on AKS, see the Microsoft documentation.
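Because nodes are billed for as long as they exist, a rough node-pool estimate is node count × hourly VM rate × hours, plus disk charges. This Python sketch uses hypothetical rates (not real Azure prices) and the common 730-hours-per-month approximation, and it leaves out any control-plane charge for paid AKS tiers.

```python
HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12 months)


def node_pool_monthly_cost(node_count: int, vm_hourly_rate: float,
                           disk_monthly_rate: float,
                           hours: float = HOURS_PER_MONTH) -> float:
    """Nodes bill for every hour they exist, busy or idle, plus their disks."""
    return node_count * (vm_hourly_rate * hours + disk_monthly_rate)


# Hypothetical rates, NOT real Azure prices: $0.20/hour per VM, $10/month per OS disk.
print(node_pool_monthly_cost(3, vm_hourly_rate=0.20, disk_monthly_rate=10.0))
```

At the assumed rates, three nodes come to about $468 per month whether or not their pods are busy, which is why reducing node count (for example with the cluster autoscaler) or choosing a smaller VM SKU is the main lever on AKS cost.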

Summary

Whether you need a lightweight, serverless approach or a fully orchestrated container platform, Azure offers a solution tailored to your needs. Choosing the right container solution on Azure depends on your specific requirements and the nature of your workloads. As discussed in this article, consider factors such as cost, resiliency, scalability, maintainability, and service integration when selecting the solution that best fits your workload.