Note: This blog post is *not* about a Kubernetes infrastructure API (an API for provisioning a Kubernetes cluster). Instead, it focuses on the idea of Kubernetes as a common infrastructure layer across private and public clouds.

Kubernetes is, of course, well known as the leading open-source system for automating the deployment and management of containerized applications. However, its uniform availability is, for the first time, giving customers a “common” infrastructure API across public and private cloud providers. Customers can take their containerized applications and Kubernetes configuration files and, for the most part, move them to another cloud platform. All of this comes without sacrificing the use of cloud provider-specific capabilities, such as storage and networking, that differ across cloud platforms.

At this point, you are probably thinking about tools like Terraform and Pulumi that have focused on abstracting the underlying cloud APIs. These tools have indeed enabled a provisioning language that spans cloud providers. However, as we will see below, the Kubernetes “common” construct goes a step further: rather than being limited to a statically defined set of APIs, Kubernetes extensibility allows the API to be extended dynamically through the use of plugins, described below.

Kubernetes Extensibility via Plugins

Kubernetes plugins are software components that extend Kubernetes and deeply integrate it with new kinds of infrastructure resources. Plugins implement interfaces such as the Container Storage Interface (CSI). CSI defines an interface, along with the minimum operational and packaging recommendations, for a storage provider (SP) to implement a compatible plugin.

Other examples of such interfaces include:

- Container Network Interface (CNI) – Specifications and libraries for writing plugins to configure network connectivity for containers.

- Container Runtime Interface (CRI) – Specifications and libraries for container runtimes to integrate with kubelet, an agent that runs on each Kubernetes node and is responsible for spawning the containers and maintaining their health.

These interfaces and their compliant plugins have opened the floodgates to third-party plugins for Kubernetes, giving customers a whole range of options. Let us review a few examples of “common” infrastructure constructs.

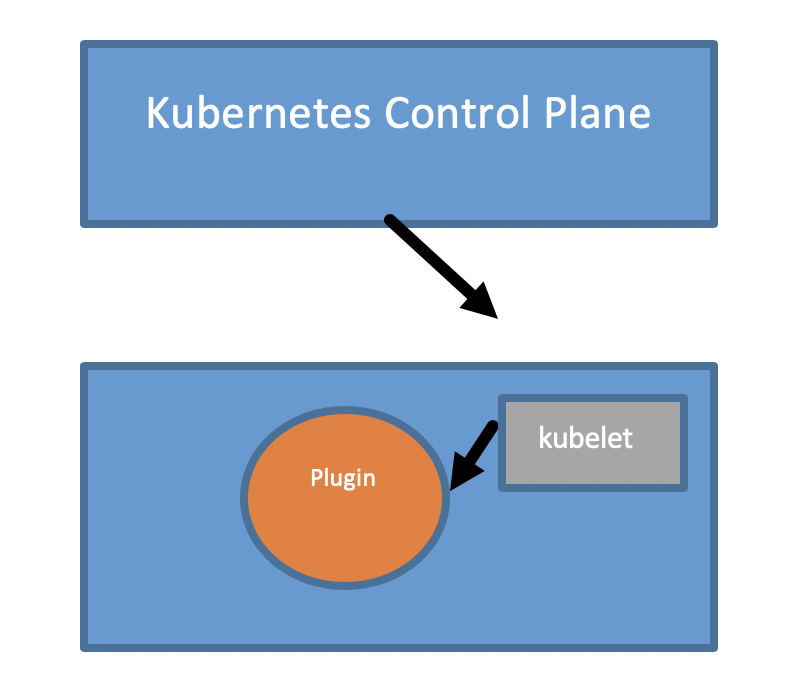

Here is a high-level view of how a plugin works in the context of Kubernetes. Instead of modifying the Kubernetes code for each type of hardware or cloud provider service, it is left to the plugins to encapsulate the know-how needed to interact with the underlying hardware resources. A plugin can be deployed to a Kubernetes node, as shown in the diagram. It is the kubelet’s responsibility to advertise the capabilities offered by the plugin(s) to the Kubernetes API server.

“Common” Networking Construct

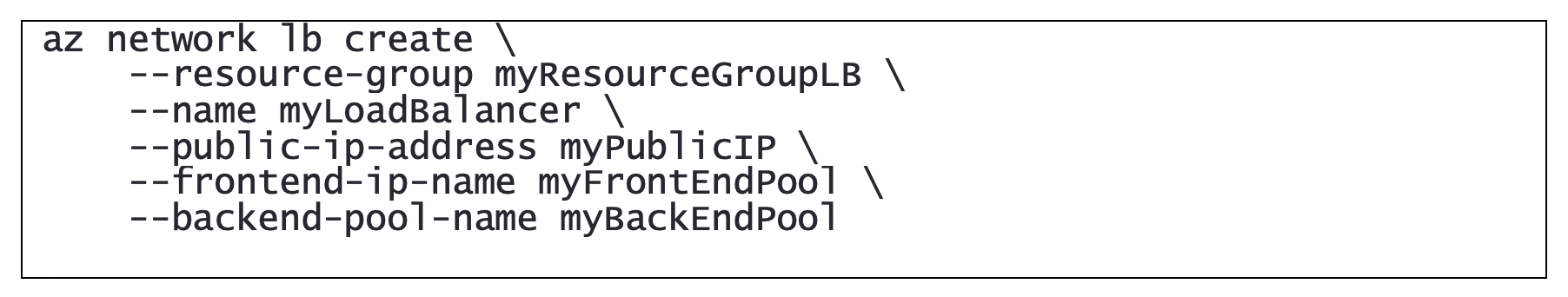

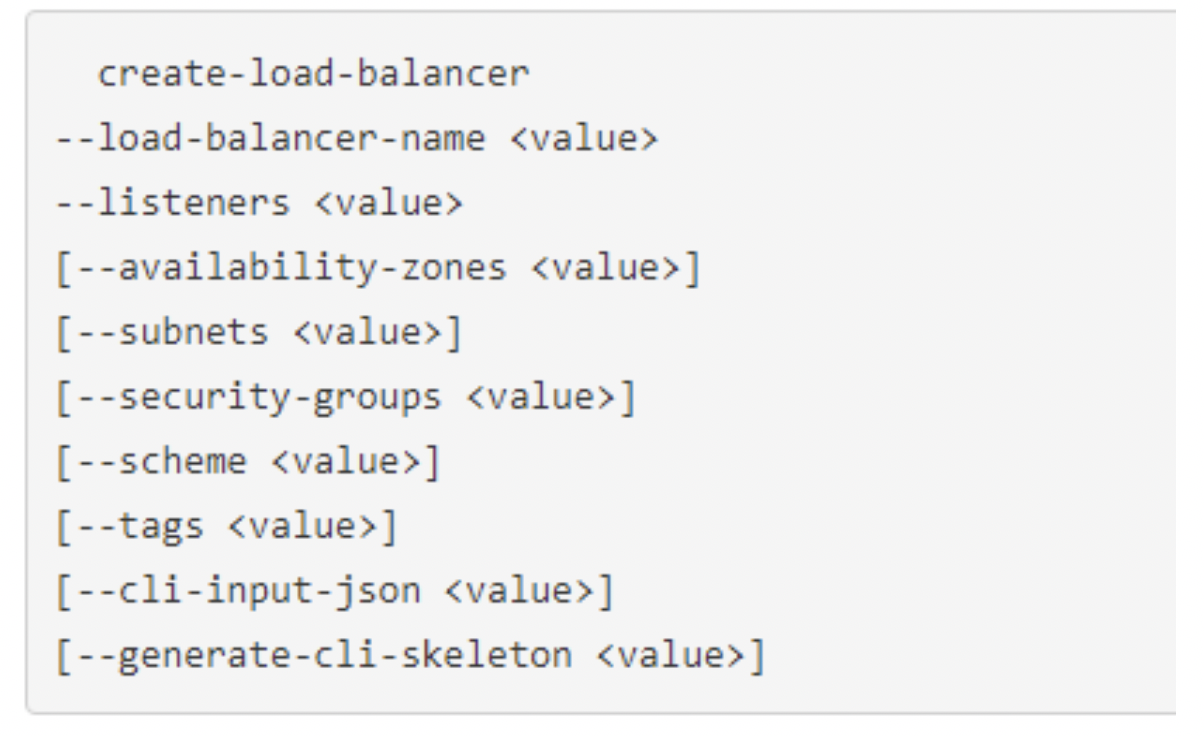

Consider a networking resource of type load balancer. As you would expect, provisioning a load balancer in Azure versus AWS is different.

Here is a CLI for provisioning an internal load balancer (ILB) in Azure:

Likewise, here is a CLI for provisioning an ILB in AWS:

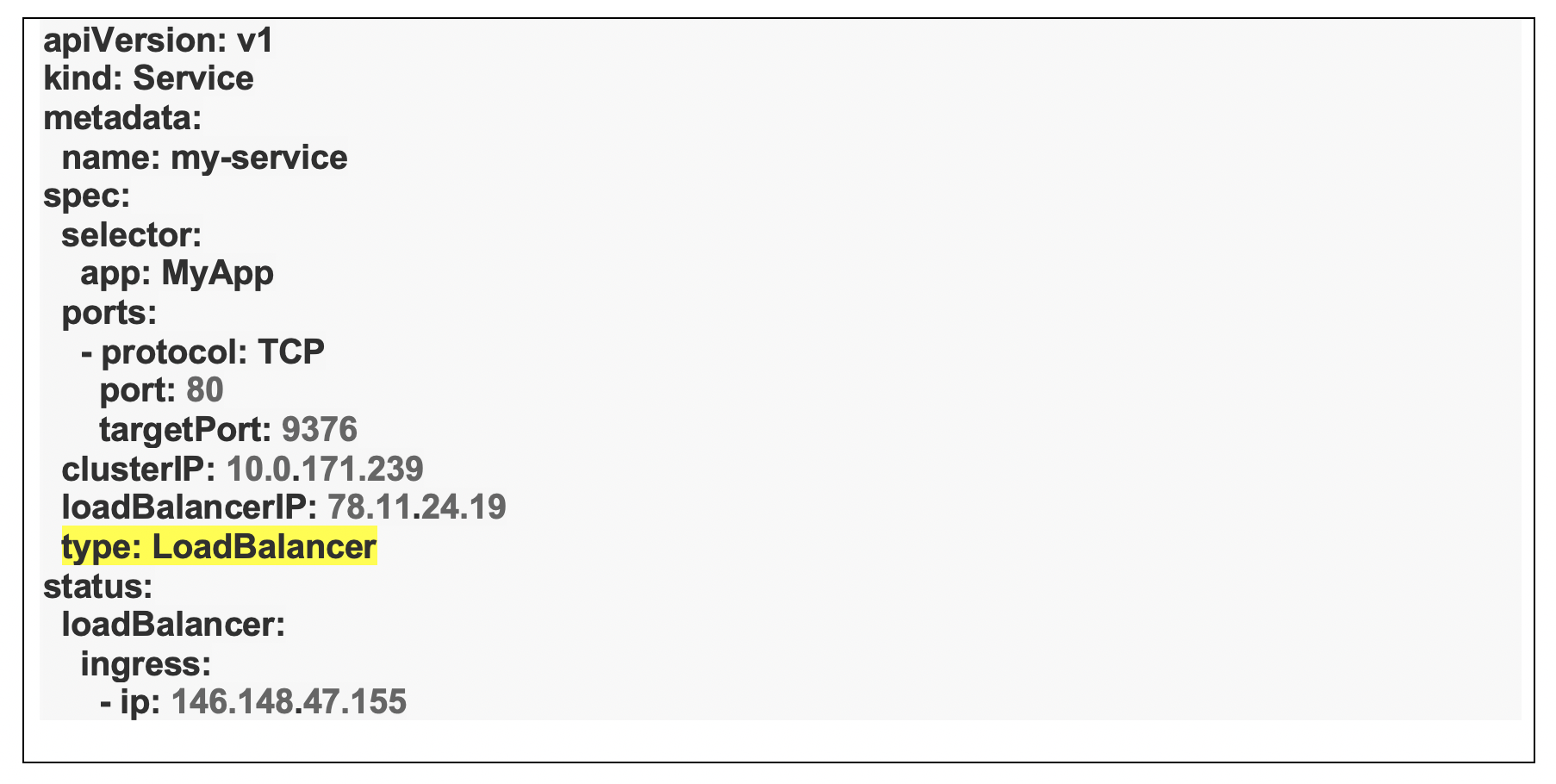

Kubernetes, through its pluggable cloud-provider integration, gives us a “common” construct for provisioning the ILB, a Service of type LoadBalancer, that is independent of the cloud provider syntax.
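To make the idea concrete, here is a minimal sketch (not taken from the original screenshots) that builds a Service manifest of type LoadBalancer for two clouds. The helper name `internal_lb_service`, the app name, and the port numbers are hypothetical, and the annotation keys are assumed to match each provider's documented internal load balancer annotation; check them against your cluster version.

```python
import json

# Cloud-specific annotations that request an *internal* load balancer.
# Assumption: these keys match what each provider's Kubernetes integration
# documents; they are the only part of the manifest that differs per cloud.
INTERNAL_LB_ANNOTATIONS = {
    "azure": {"service.beta.kubernetes.io/azure-load-balancer-internal": "true"},
    "aws": {"service.beta.kubernetes.io/aws-load-balancer-internal": "true"},
}


def internal_lb_service(cloud: str, name: str = "internal-app") -> dict:
    """Build a Service manifest of type LoadBalancer for the given cloud."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": dict(INTERNAL_LB_ANNOTATIONS[cloud]),
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},  # hypothetical pod label
            "ports": [{"port": 80, "targetPort": 8080}],  # illustrative ports
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML manifests.
    print(json.dumps(internal_lb_service("azure"), indent=2))
```

Note that the `spec` is identical for both clouds; only the annotation that asks for an internal rather than a public load balancer differs.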

“Common” Storage Construct

Now let us consider a storage resource type. As you would expect, provisioning a storage volume in Azure versus Google is different.

Here is a CLI for provisioning a disk in Azure:

Here is a CLI for provisioning a persistent disk in Google:

Once again, under the plugin model, Kubernetes gives us a “common” construct for provisioning storage that is independent of the cloud provider syntax.

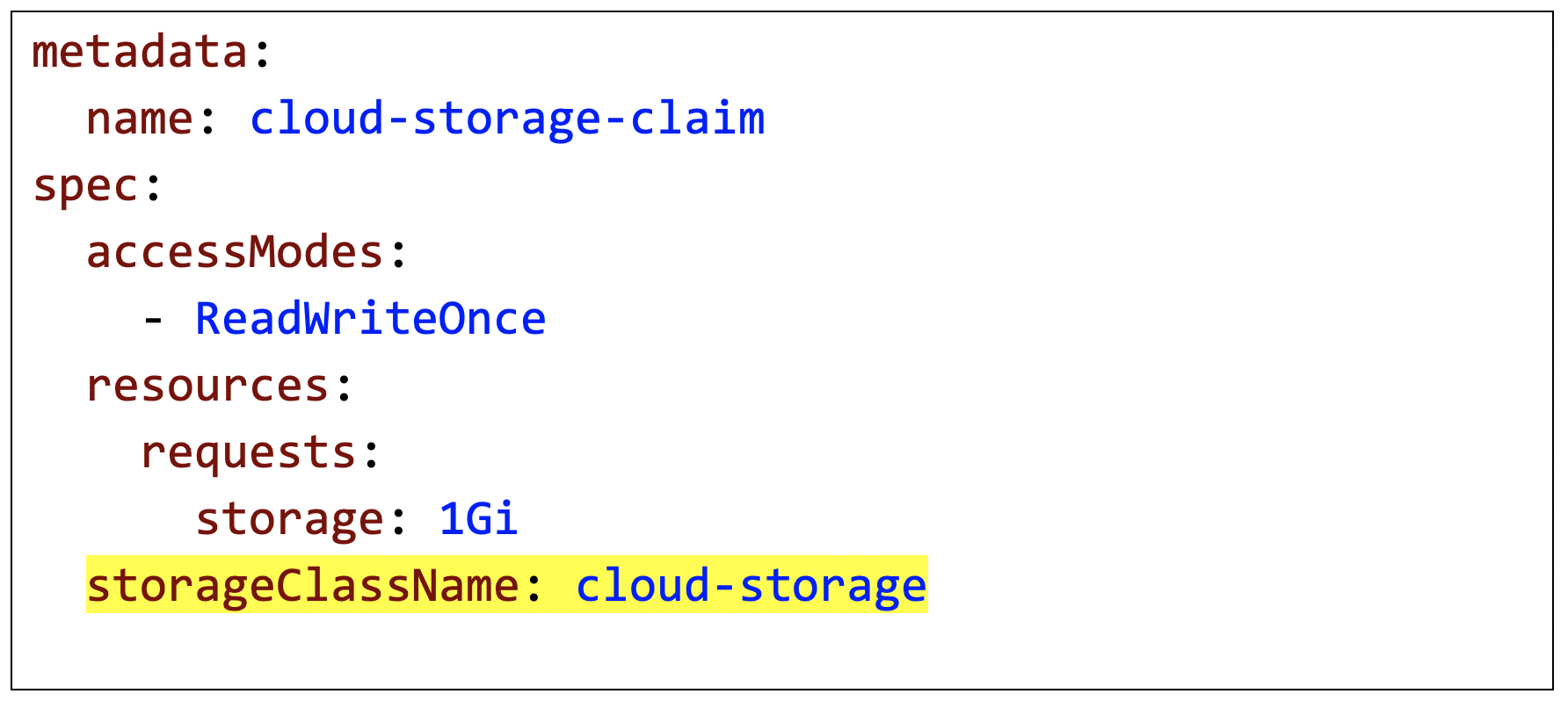

Below is an example of a “common” storage construct across cloud providers. In this example, a claim is made for a persistent volume of size 1Gi with access mode “ReadWriteOnce”. Additionally, the storage class “cloud-storage” is associated with the request. As we will see next, persistent volume claims decouple us from the underlying storage mechanism.
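A claim like the one described here can be sketched as follows. This is an illustrative reconstruction rather than the original example; the helper name `persistent_volume_claim` and the claim name `app-data` are hypothetical, while the size, access mode, and storage class name come from the text.

```python
import json


def persistent_volume_claim(
    name: str = "app-data",              # hypothetical claim name
    size: str = "1Gi",
    storage_class: str = "cloud-storage",
) -> dict:
    """Build a PersistentVolumeClaim manifest with no cloud-specific fields."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(persistent_volume_claim(), indent=2))
```

Nothing in the claim names a cloud provider; the only link to the backing storage is the storage class name.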

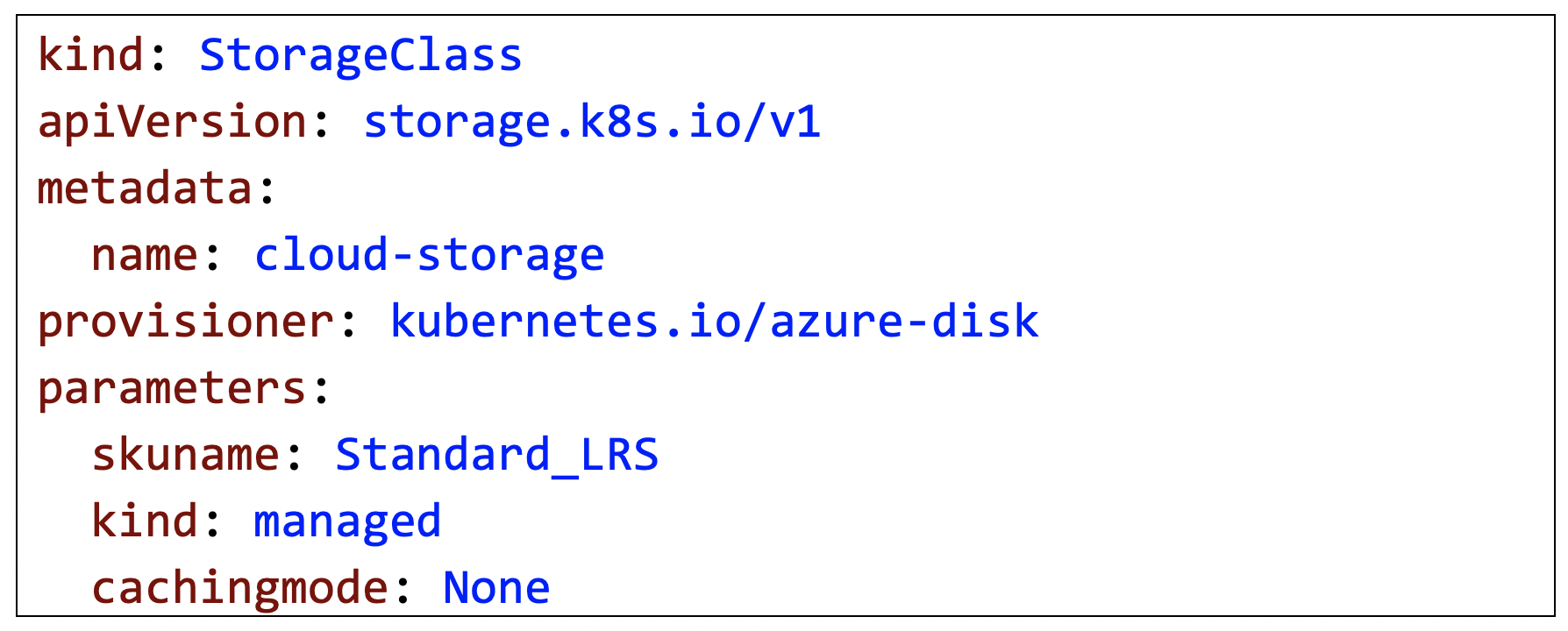

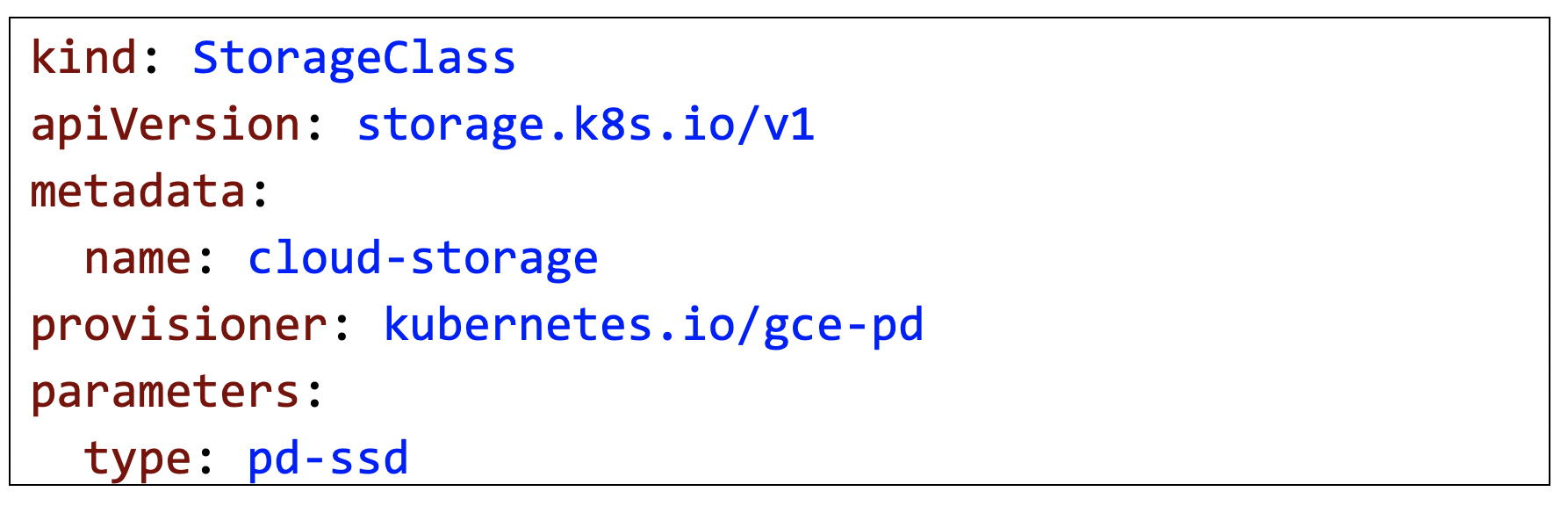

The StorageClass determines which storage plugin gets invoked to support the persistent volume claim. In the first example below, the StorageClass represents the Azure Disk plugin. In the second, it represents the Google Compute Engine (GCE) Persistent Disk plugin.
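As a rough sketch of the two variants, the snippet below builds a StorageClass named “cloud-storage” for each cloud. The helper name `storage_class` is hypothetical, and the provisioner names and parameters are assumed to be the in-tree Azure Disk and GCE Persistent Disk plugins; newer clusters typically use the corresponding CSI drivers instead, so treat the values as illustrative.

```python
import json

# Per-cloud backend settings. Assumption: in-tree provisioner names and
# parameters as documented for Kubernetes storage classes; CSI-based
# clusters would use different provisioner names.
BACKENDS = {
    "azure": {
        "provisioner": "kubernetes.io/azure-disk",
        "parameters": {"storageaccounttype": "Standard_LRS", "kind": "managed"},
    },
    "gce": {
        "provisioner": "kubernetes.io/gce-pd",
        "parameters": {"type": "pd-standard"},
    },
}


def storage_class(cloud: str, name: str = "cloud-storage") -> dict:
    """Build a StorageClass that maps the shared name to a cloud backend."""
    backend = BACKENDS[cloud]
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": backend["provisioner"],
        "parameters": dict(backend["parameters"]),
    }


if __name__ == "__main__":
    for cloud in ("azure", "gce"):
        print(json.dumps(storage_class(cloud), indent=2))
```

Because both classes share the same name, a claim that refers to “cloud-storage” can stay unchanged when the workload moves between clusters.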

“Common” Compute Construct

Finally, let us consider a compute resource type. As you would expect, provisioning a compute resource in Azure versus GCE is different.

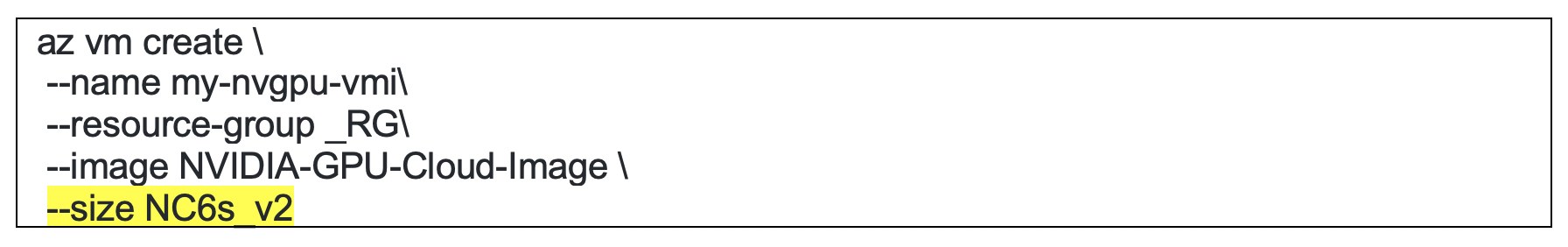

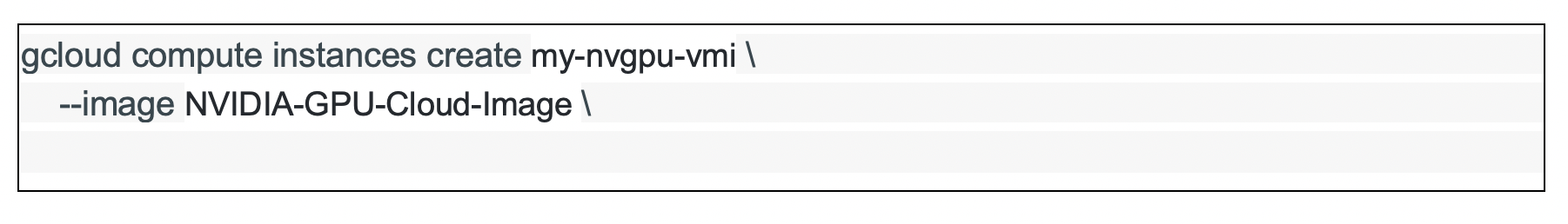

Here is a CLI for provisioning a GPU VM in Azure:

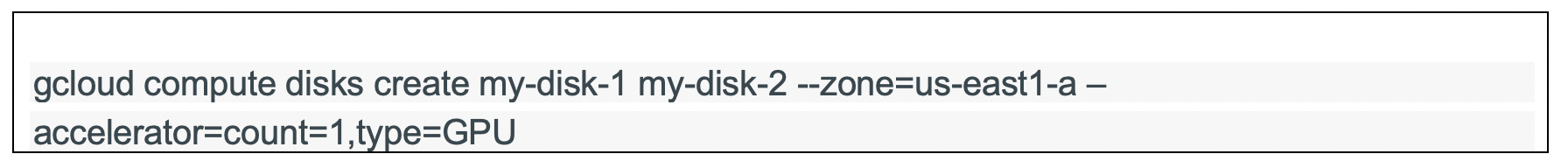

Here is a CLI for provisioning a GPU in Google Cloud:

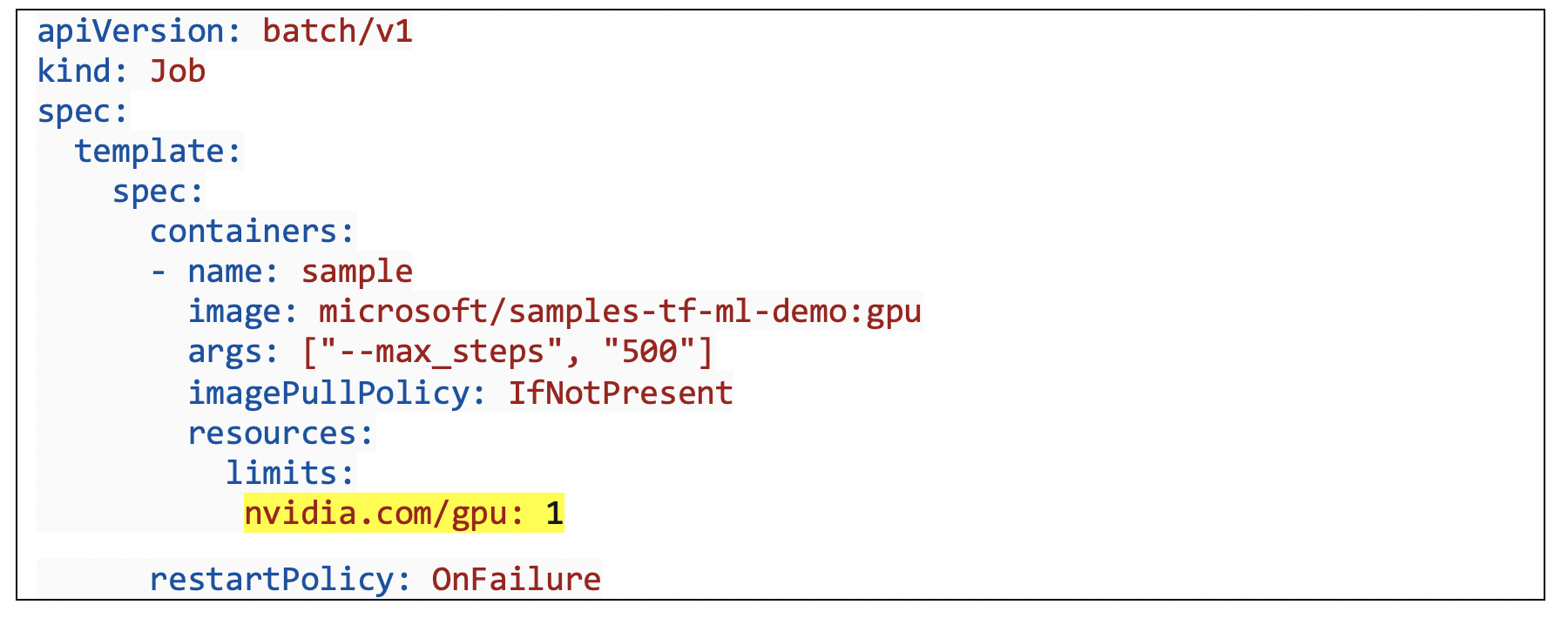

Once again, under the plugin (device) model, Kubernetes gives us a “common” compute construct across cloud providers. In the example below, we are requesting a compute resource of type GPU. An underlying plugin (NVIDIA) installed on the Kubernetes node is responsible for making the requisite compute resource available.

Source: https://docs.microsoft.com/en-us/azure/aks/gpu-cluster
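For illustration, a GPU request of this kind can be sketched as below. This is not the example from the source linked above: the helper name `gpu_pod`, the pod name, and the container image are hypothetical placeholders. The resource name `nvidia.com/gpu` is the one advertised by the NVIDIA device plugin.

```python
import json


def gpu_pod(
    name: str = "gpu-demo",                        # hypothetical pod name
    image: str = "example.com/gpu-sample:latest",  # placeholder image
    gpus: int = 1,
) -> dict:
    """Build a Pod manifest that requests GPUs through resource limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested in the limits section; the scheduler
                    # places the pod on a node that advertises this resource.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(gpu_pod(), indent=2))
```

The manifest says nothing about Azure or Google Cloud; it only states that the container needs one GPU.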

Summary

As you can see from the examples discussed in this post, Kubernetes is becoming a “common” infrastructure API across private, public, hybrid, and multi-cloud setups. Even traditional “infrastructure as code” tools like Terraform are building on top of Kubernetes.