I recently built a machine learning model, trained it, and explored the implications of deploying it using Kubernetes. Machine learning trains a program to recognize patterns in data so that when new data is provided, it can make predictions based on what it’s learned. Kubernetes is a container orchestrator that automates the deployment and scaling of containers. Packaging a machine learning program and deploying it on Kubernetes has the potential to help our customers with their increasingly complex machine learning needs.

First, I determined what I wanted to accomplish with machine learning, the tools I needed, and how to put them together. Teaching a machine how to recognize imagery is fascinating to me, so I focused on image recognition. I found the PlanesNet dataset on Kaggle — a dataset focused on recognizing planes in aerial imagery. The dataset would be simple enough to implement but have good potential for further exploration.

To build a proof of concept, I used TensorFlow, TFLearn, and Docker. TensorFlow is an open-source machine learning library, and TFLearn is a higher-level API on top of TensorFlow that reduces the amount of code needed to get started. TFLearn is a Python library, so I wrote my proof of concept in Python. I used Docker to package the program into a container to run on Kubernetes.

Machine Learning

The PlanesNet dataset has 32,000 color images, each at 20px by 20px. There are 8,000 images classified as “plane” and 24,000 images classified as “no-plane.” Reading in the dataset and preparing it for processing was straightforward using Python libraries like Pillow and NumPy. Then I broke the dataset up into a training set and a testing set using the train_test_split function in the sklearn Python library. The training set was used to build the model weights while the testing set validated how well the model was trained.
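To make the split concrete, here is a minimal standard-library sketch of what a function like train_test_split does: shuffle the paired samples and labels, then hold out a fraction for testing. It is a stand-in, not sklearn's implementation. The 20% test fraction, the seed, the `split_train_test` name, and the placeholder file names are all assumptions for illustration.

```python
import random


def split_train_test(samples, labels, test_fraction=0.2, seed=42):
    """Shuffle paired samples/labels and hold out a fraction for testing."""
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # seeded so the split is repeatable
    n_test = int(round(len(indices) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    x_train = [samples[i] for i in train_idx]
    x_test = [samples[i] for i in test_idx]
    y_train = [labels[i] for i in train_idx]
    y_test = [labels[i] for i in test_idx]
    return x_train, x_test, y_train, y_test


# Placeholder data: file names stand in for the decoded 20x20 images.
# Label 1 means "plane", label 0 means "no-plane" (assumed encoding).
samples = [f"image_{i}.png" for i in range(32000)]
labels = [1] * 8000 + [0] * 24000

x_train, x_test, y_train, y_test = split_train_test(samples, labels)
print(len(x_train), "training images,", len(x_test), "testing images")
```

Changing the seed changes which images land in each set, so the accuracy measured on the testing set can vary from run to run.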

Neural Network

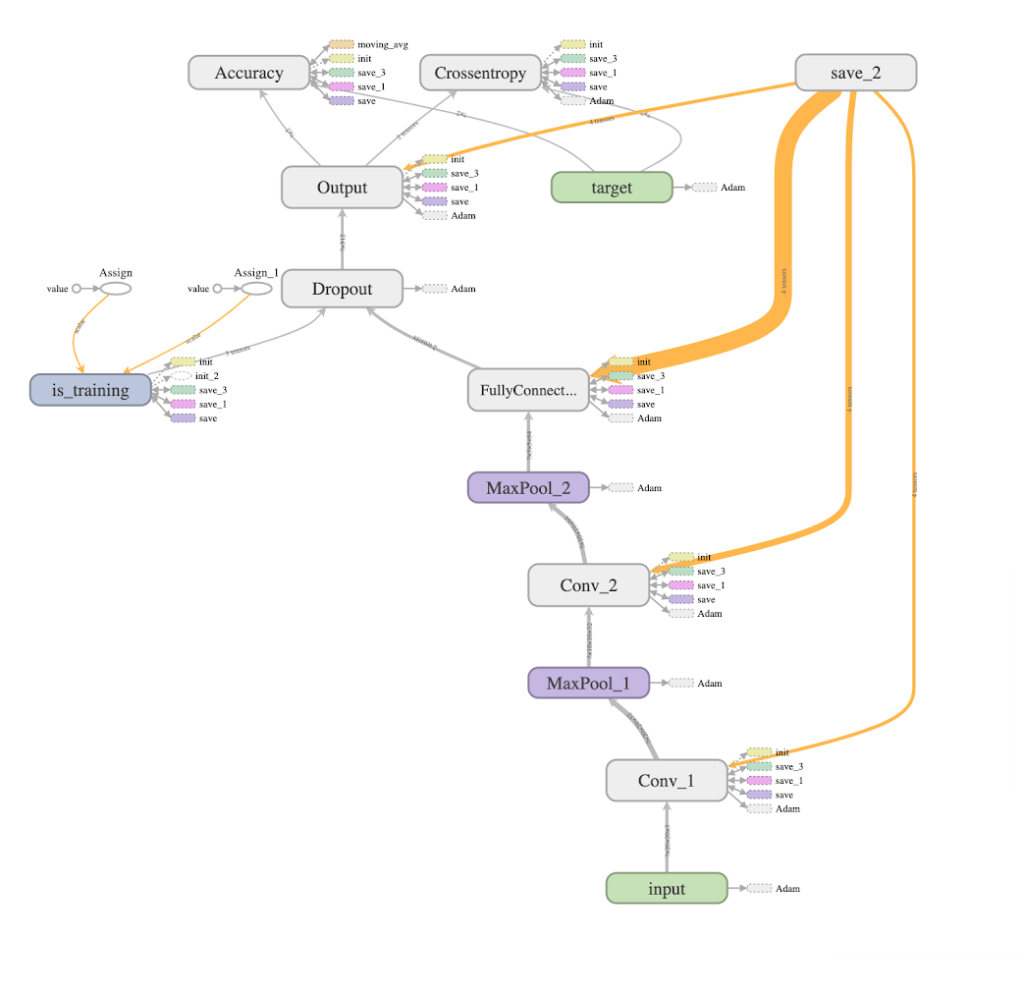

One of the most complex parts was designing my simple neural network. A neural network is organized into layers: an input layer, then one or more hidden layers, and finally an output layer.

When creating the input layer, I defined its shape, preprocessing, and augmentation. Each PlanesNet image is a 20 by 20 pixel matrix with 3 color channels. Preprocessing let me adjust how the data was prepared for both training and testing, while augmentation let me apply operations (like flipping or rotating images) to the data during training.

Because I was working with imagery, I chose to use two convolutional hidden layers before arriving at the output layer. Convolutional layers perform a set of calculations on each pixel and its surrounding pixels, attempting to detect features of an image (e.g., lines and curves). After my hidden layers, I introduced dropout, which randomly disables a fraction of the connections during training and helps to reduce overfitting.
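To illustrate the "each pixel and its surrounding pixels" calculation, here is a small pure-Python sketch of a single 3 by 3 filter sliding over a grayscale grid. The `convolve2d` helper, the tiny image, and the hand-picked vertical-edge kernel are illustrative assumptions; in a real convolutional layer the kernel values are learned during training rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over a 2D grid with no padding.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    k = len(kernel)
    out_height = len(image) - k + 1
    out_width = len(image[0]) - k + 1
    output = []
    for row in range(out_height):
        out_row = []
        for col in range(out_width):
            total = 0
            # Weighted sum of the pixel neighborhood under the kernel.
            for i in range(k):
                for j in range(k):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# A 5x5 image that is dark on the left and bright on the right.
image = [[0, 0, 0, 1, 1] for _ in range(5)]

# A kernel that responds where brightness increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

edges = convolve2d(image, vertical_edge)
for row in edges:
    print(row)
```

The flat dark region produces zeros, while positions that straddle the dark-to-bright boundary produce larger values, which is the sense in which a filter "detects" a line.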

Next, I added a readout layer which brought my neural network down to the number of expected outputs. Finally, I added a regression layer to specify a loss function, optimizer, and learning rate. With this network, I could now train it over multiple iterations, called epochs, until I reached the desired level of prediction accuracy.
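The layers described above could be assembled in TFLearn roughly as follows. This is a sketch under assumptions, not the exact network from this project: the filter counts, kernel sizes, activations, dropout keep probability, optimizer, learning rate, epoch count, and the specific preprocessing and augmentation steps are all illustrative choices. It also assumes the labels are one-hot encoded.

```python
# Assumed sizes taken from the dataset description: 20x20 RGB, two classes.
INPUT_SHAPE = [None, 20, 20, 3]  # batch, height, width, color channels
NUM_CLASSES = 2                  # "plane" and "no-plane"


def build_network(learning_rate=0.001, keep_prob=0.5):
    """Build a TFLearn graph that mirrors the layers described above.

    TFLearn is imported inside the function so this file can be loaded
    on a machine without TensorFlow installed.
    """
    from tflearn.data_augmentation import ImageAugmentation
    from tflearn.data_preprocessing import ImagePreprocessing
    from tflearn.layers.conv import conv_2d
    from tflearn.layers.core import dropout, fully_connected, input_data
    from tflearn.layers.estimator import regression

    # Preprocessing is applied at both training and testing time.
    img_prep = ImagePreprocessing()
    img_prep.add_featurewise_zero_center()
    img_prep.add_featurewise_stdnorm()

    # Augmentation is applied only while training.
    img_aug = ImageAugmentation()
    img_aug.add_random_flip_leftright()
    img_aug.add_random_rotation(max_angle=25.0)

    # Input layer: shape, preprocessing, and augmentation.
    network = input_data(shape=INPUT_SHAPE,
                         data_preprocessing=img_prep,
                         data_augmentation=img_aug)
    # Two convolutional hidden layers (filter counts are assumptions).
    network = conv_2d(network, 32, 3, activation="relu")
    network = conv_2d(network, 64, 3, activation="relu")
    # Dropout; TFLearn's argument is the probability of keeping a unit.
    network = dropout(network, keep_prob)
    # Readout layer: one output per class.
    network = fully_connected(network, NUM_CLASSES, activation="softmax")
    # Regression layer: loss function, optimizer, and learning rate.
    network = regression(network, optimizer="adam",
                         loss="categorical_crossentropy",
                         learning_rate=learning_rate)
    return network


def train(x_train, y_train, x_test, y_test, epochs=50):
    """Train for a number of epochs, validating against the testing set."""
    import tflearn

    model = tflearn.DNN(build_network())
    model.fit(x_train, y_train, n_epoch=epochs,
              validation_set=(x_test, y_test),
              shuffle=True, show_metric=True)
    return model
```

Keeping the network definition in one function means the training program and the prediction program can build the same graph before loading or saving weights.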

Simple Neural Network

Using this model, I could now predict whether an image contained an airplane. I loaded the image, called the predict function, and viewed the output. The output gave me the likelihood, as a percentage, that the image was an airplane.
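One common way a two-output network turns its raw scores into likelihoods is a softmax function. The sketch below assumes the readout layer uses softmax; the class ordering and the raw scores are made-up values for illustration, not output from the trained model.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


CLASS_NAMES = ["no-plane", "plane"]  # hypothetical ordering of the outputs
raw_scores = [0.0, 2.0]              # made-up scores for a single image

probabilities = softmax(raw_scores)
for name, probability in zip(CLASS_NAMES, probabilities):
    print(f"{name}: {probability:.1%}")
```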

Exploring Scaling

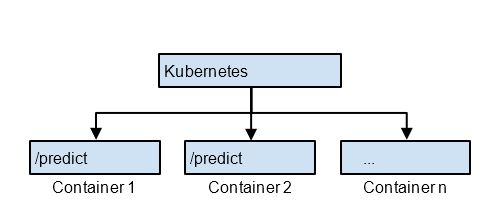

Now that I had my simple neural network for training and predicting, I packaged it into a Docker container so it could be run on more than my single computer. As I examined the details of deploying to Kubernetes, two things quickly became apparent. First, my simple neural network would not train across multiple container instances. Second, my prediction program would scale well if I wrapped it in a web API.

For my simple neural network, I had not implemented anything to split a dataset, train across multiple containers, and bring the results back together. Therefore, running multiple instances of my container using Kubernetes would only provide the benefit of choosing the container with the highest-accuracy model. (The dataset splitting process, along with the input augmentation, causes each container's model to reach a different accuracy.) Having multiple containers coordinate the training of my simple neural network would require further design.

My container’s prediction program executes via the command line, but wrapping it in a web API endpoint would make it easier to use. Since each instance of the container has the trained model, Kubernetes could scale the number of running instances of my container up or down to meet the demand on the web API endpoint. Kubernetes also provides a method for rolling out updates to my container if I further train my network model.
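As a rough idea of what such a wrapper might look like, here is a minimal sketch using only Python's standard library. The `/predict` path, the port, the JSON field name, and the `predict_plane` stub are hypothetical; a real version would decode the uploaded image and call the trained model's predict function instead of returning a fixed value.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict_plane(image_bytes):
    """Hypothetical stand-in for the trained model's predict call."""
    return 0.5  # placeholder likelihood, not a real prediction


def build_response(path, image_bytes):
    """Return (status code, JSON-ready dict) for a POST request."""
    if path != "/predict":
        return 404, {"error": "unknown endpoint"}
    return 200, {"plane_probability": predict_plane(image_bytes)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the uploaded image from the request body.
        length = int(self.headers.get("Content-Length", 0))
        image_bytes = self.rfile.read(length)
        status, payload = build_response(self.path, image_bytes)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Listen on all interfaces so the container's port can be exposed."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Calling `serve()` as the container's entry point would start the endpoint. Because the handler keeps no state between requests, any number of identical instances can sit behind a load balancer, which is what makes this part of the program a good fit for Kubernetes scaling.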

Conclusion

This was an excellent exercise in building a machine learning model, training it to predict an airplane from aerial imagery, and deploying it on Kubernetes. Additional applications could include expanding the dataset to include different angles of planes, along with recognizing specific types of planes. It would also be beneficial to redesign the neural network so that training, not just prediction, could take advantage of Kubernetes scaling. Using Kubernetes to deploy a prediction API based on a trained model is both beneficial and practical today.