At a recent holiday dinner, a conversation with a friend eventually progressed to the topics of self-driving cars and facial-recognition software – and the overall roles and capabilities of artificial intelligence (AI). My friend’s assertion was that “AI is ultimately about pattern matching.” In essence, you equip the AI with a library of “patterns” and their corresponding associated actions. Based on the input it receives from the real world, the AI software program will then make an attempt to match the input to a stored pattern and execute the corresponding associated action.

At a recent holiday dinner, a conversation with a friend eventually progressed to the topics of self-driving cars and facial-recognition software – and the overall roles and capabilities of artificial intelligence (AI). My friend’s assertion was that “AI is ultimately about pattern matching.” In essence, you equip the AI with a library of “patterns” and their corresponding associated actions. Based on the input it receives from the real world, the AI software program will then make an attempt to match the input to a stored pattern and execute the corresponding associated action.

Of course any program, regardless of whether it is designed to steer a car or detect a face in an image, relies on pattern-matching at the lowest level. That said, as we will see shortly, a deep learning-based approach is a fundamentally different way to solve the problem. And it’s an approach that is poised to reinvent computing.

Let’s think through the sequence of events needed to accomplish the task of recognizing a face within an image. One approach would be to iteratively decompose the input image and look for specific patterns such as “an eye on the top left,” “a nose in middle,” or “a mouth in the middle.” If there is a pattern match for any of these characteristics, we’ll tell our program to recognize that there’s a face here. Of course, this logic suffers from many deficiencies. Perhaps a portion the face has been cut off or cropped out, or maybe another object in the picture is obscuring some of the features. Mind you, we have not even begun to consider the myriad and minute facial variations among the human species. The sheer number of patterns can quickly become computationally intractable.

Fortunately, some of the decade’s latest advancements in artificial intelligence – especially neural networks – look promising. The neural nets offer us a possible approach to solve problems associated with facial detection through “training” versus “explicit programming.” Furthermore, given a training dataset, neural nets can largely learn on their own (“deep learning”). In essence, we are “outsourcing” explicit programming steps from the previous paragraph by training the program itself to look for more patterns on its own.

A neural net is a large network of neurons – think of an individual neuron as a way to model a given mathematical function. Each neuron is comprised of multiple inputs. Each input gets multiplied by a weight. If the sum of all inputs, multiplied by their corresponding weights, exceeds a certain threshold, the neuron outputs 1, otherwise it outputs 0. Adjusting the aforementioned weights is the way you adjust the function to change its behavior.

(The AI neuron primarily parallels the way a human neuron/brain cell fires in response to stimulation. However, the similarity does not extend much further – much about neuron interaction inside the human brain remains largely unknown).

An artificial neural net (ANN) is a large network of such AI neurons. And by “large,” I mean tens of millions of inputs, with multiple layers (width and depth) of neurons. For example, a high-performing ANN that recognizes images exceedingly well can potentially be comprised of 60 million+ input values. So what is going on here? Simply put, a large network of function approximators (neurons) can collectively model an image recognition function on a higher level. Here are some cool examples of things that a trained neural network can recognize: Source

Source

But how do we “train” a large network of neurons? Essentially, this means adjusting millions of weights on individual functions. This is where the training data comes in handy. We can continue to vary the weights in an attempt to minimize the discrepancy between actual and desired output. Fortunately, we can rely on mathematical concepts, such as gradient descent, to systematically vary the weights. And, in order to deal with such a large number of variables, we rely on another math concept of partial derivatives. You can explore these mathematical concepts in further detail, but suffice to understand that, by using a combination of these math tricks, a training data set, and a large computational capability, we can teach an ANN to do productive things. For a mathematical proof that an artificial neural network can approximate any function, read this paper.

As you can see, we did not write an imperative piece of pattern-matching code, requiring us to account for and convey myriad patterns to the program. Instead, the neural network programmed itself to grow and recognize patterns. Furthermore, the programming is not a series of instructions, but rather a collection of a large number of weights. And herein lies a problem – it is very hard for humans to visualize how this large neural network is trained. There is a body of work currently underway to help us better understand what the trained network looks like internally.

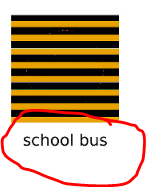

Before we close, it is also important to note that a trained neural network’s approach to recognizing a human face in a picture is very different from human vision. Ultimately, even the trained algorithm is quite dumb – it is only designed to look for certain characteristics in part of the image. It is completely oblivious to the features of the picture it is not trained to look for. In fact, there have been interesting studies to show how neural networks can be easily fooled. See the example below – taken from http://www.evolvingai.org/fooling. In this example, a trained neural network believes with a high degree of certainty that the following is a picture of a school bus…

This is one reason why I personally don’t worry too much about AI taking over the world anytime soon.

This is one reason why I personally don’t worry too much about AI taking over the world anytime soon.