There are several ways to import and export data through Microsoft Dataverse, the data backbone of Microsoft Power Platform. The data team of the internal AIS Microsoft Power Platform Hackathon used three different data movement techniques to solve the hackathon use case: Dataflows, Power Query, and Power Automate. Read on to learn how we did it.

What is Dataverse?

Dataverse is designed to work with any data and incorporates all the significant data technologies that any organization needs – relational, non-relational, file, image, search, and data lake. Dataverse helps to store and manage data securely. Dataverse includes a set of visual designers to create, edit, and interact with data. In Dataverse, tables are used to model and manage business data. To increase productivity, Dataverse includes a set of tables known as standard tables.

We used Dataverse to store all our catalog data, and we imported and exported data as each scenario required.

Our Approach

Our hackathon team was tasked with migrating the data of a legacy application into Dataverse. The legacy application data was created from the eShopOnWeb project and was hosted in Azure SQL. Our approach was to break this use case down into two problems:

- Migrate the tables (schema)

- Migrate the data

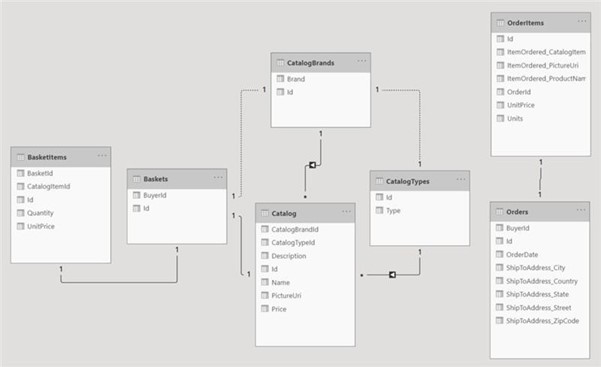

The Power BI Model View of our data structure in the screenshot above shows the entities and relationships we needed to migrate. The schema did not need to change during the migration, so the same model represents both the source and the destination data.

Migrate the Tables

We evaluated 3 techniques for migrating the tables into Dataverse.

- Create the tables automatically during the data migration when using Dataflows

- Write an application that creates the columns through the Dataverse Web API

- Manually create them in the portal

Option 1: Dataflows

Tables can be created in the process of moving data into Dataverse using Dataflows. This is only an option if you are migrating the data at the same time and do not need to modify the schema.

Option 2: Dataverse Web API

The Dataverse Web API provides a RESTful web service for interacting with data in Microsoft Dataverse from a wide variety of platforms and programming languages, such as C#. This is an excellent option to consider if you'd like to migrate the schema programmatically and need to change the data model in the process.

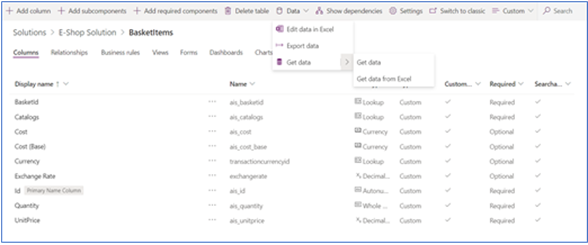
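To make Option 2 more concrete, here is a minimal Python sketch of the kind of request body such an application might send to create a table. It only builds the JSON payload and does not call the service. The table name `new_CatalogBrand`, the `new_` publisher prefix, and the helper functions are hypothetical, and the property names reflect our understanding of the Web API's table definition format, so check them against the current Microsoft documentation before relying on them.

```python
import json


def label(text, language_code=1033):
    """Build a Label object (1033 = English; assumed default language)."""
    return {
        "@odata.type": "Microsoft.Dynamics.CRM.Label",
        "LocalizedLabels": [
            {
                "@odata.type": "Microsoft.Dynamics.CRM.LocalizedLabel",
                "Label": text,
                "LanguageCode": language_code,
            }
        ],
    }


def build_table_definition(schema_name, display_name, plural_name, primary_column):
    """Return a request body describing a new user-owned table.

    schema_name and primary_column are hypothetical names that carry a
    publisher prefix such as "new_".
    """
    return {
        "@odata.type": "Microsoft.Dynamics.CRM.EntityMetadata",
        "SchemaName": schema_name,
        "DisplayName": label(display_name),
        "DisplayCollectionName": label(plural_name),
        "OwnershipType": "UserOwned",
        "HasActivities": False,
        "HasNotes": False,
        # Every table needs one primary name column, defined alongside it.
        "Attributes": [
            {
                "@odata.type": "Microsoft.Dynamics.CRM.StringAttributeMetadata",
                "SchemaName": primary_column,
                "IsPrimaryName": True,
                "MaxLength": 100,
                "DisplayName": label("Name"),
            }
        ],
    }


payload = build_table_definition(
    "new_CatalogBrand", "Catalog Brand", "Catalog Brands", "new_Name"
)
body = json.dumps(payload, indent=2)
print(body)
```

As we understand it, an application would send this body in an authenticated `POST` to the environment's `EntityDefinitions` endpoint. Keeping the payload builder separate from the HTTP call makes it easy to adjust the data model in code before anything is created.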

Option 3: Manual

Manually creating tables in the portal is the simplest of the three options but could be time-consuming and error-prone if you make many tables or migrate the same schema into multiple environments. However, given the time constraint of our hackathon and the simple use case, we chose to use this option.

Migrate the Data

Once the tables are in place, Dataverse is ready to receive data from the source system. We evaluated three options to migrate data from the legacy application to Dataverse.

- Import from a CSV file

- Use Power Query

- Use Dataflows

Option 1: Import from CSV

You can load data in Dataverse by importing data from different files like Excel or CSV. This is an excellent option if you need to do a 1-time import of data that does not need to be transformed and does not include any unsupported data types, such as timezones, images, or multi-select choices. We connected to the legacy SQL database using SQL Server Management Studio and exported the data to a CSV during the hackathon. We then completed the import process using the portal to upload the CSV and map the source and destination columns.
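We mapped the source and destination columns in the portal, but the same idea can be illustrated in code. The Python sketch below renames the headers of an exported CSV so they match the destination column names and fails early if an expected source column is missing. The column names in `COLUMN_MAP` and the sample rows are made up for illustration and are not taken from our hackathon data.

```python
import csv
import io

# Hypothetical mapping: legacy SQL column name -> Dataverse column name.
COLUMN_MAP = {"Id": "new_legacyid", "Brand": "new_name"}


def remap_csv(source_text, column_map):
    """Rename CSV headers per column_map and drop any unmapped columns."""
    reader = csv.DictReader(io.StringIO(source_text))
    source_columns = reader.fieldnames or []
    missing = [name for name in column_map if name not in source_columns]
    if missing:
        raise ValueError(f"source CSV is missing columns: {missing}")

    out = io.StringIO()
    writer = csv.DictWriter(
        out, fieldnames=list(column_map.values()), lineterminator="\n"
    )
    writer.writeheader()
    for row in reader:
        writer.writerow({dest: row[src] for src, dest in column_map.items()})
    return out.getvalue()


# Made-up sample export with one extra column that is not migrated.
legacy_csv = "Id,Brand,Internal\n1,Azure,x\n2,Visual Studio,y\n"
remapped = remap_csv(legacy_csv, COLUMN_MAP)
print(remapped)
```

A check like this is most useful when the same export has to be imported into more than one environment, because the mapping lives in one place instead of being repeated by hand.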

Option 2: Power Query

With Power Query, users can filter, transform, and combine data before loading it into a new or existing Dataverse table. The data source can be online or on-premises, including SQL Server, Salesforce, IBM DB2, Access, Excel, or a Web API. Use this option if you are moving a large volume of data or if the data needs to be reshaped during the migration.
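Power Query has its own editor and formula language, so the following is only a plain Python analogy of the filter, transform, and combine steps, not Power Query code. The sample rows and column names are made up, loosely modeled on catalog items and brands.

```python
# Made-up sample rows; real data would come from the legacy database.
brands = [
    {"Id": 1, "Brand": "Azure"},
    {"Id": 2, "Brand": "Visual Studio"},
]
items = [
    {"Id": 10, "Name": " Mug ", "Price": "8.50", "CatalogBrandId": 2},
    {"Id": 11, "Name": "Sheet", "Price": "0", "CatalogBrandId": 1},
    {"Id": 12, "Name": "Hoodie", "Price": "19.50", "CatalogBrandId": 1},
]


def reshape(items, brands):
    """Filter out unpriced items, clean values, and attach the brand name."""
    brand_by_id = {brand["Id"]: brand["Brand"] for brand in brands}
    result = []
    for item in items:
        price = float(item["Price"])  # transform: text -> number
        if price <= 0:  # filter: skip items without a price
            continue
        result.append(
            {
                "Name": item["Name"].strip(),  # transform: trim whitespace
                "Price": price,
                "Brand": brand_by_id[item["CatalogBrandId"]],  # combine: lookup
            }
        )
    return result


rows = reshape(items, brands)
for row in rows:
    print(row)
```

The point of the analogy is the order of operations: rows are shaped before they reach the destination table, so the destination never sees the raw legacy values.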

Option 3: Dataflows

Dataflows are built upon Power Query, so they have all the same benefits but bring the added advantage of letting users trigger the migration on-demand or automatically on a schedule.

We will be diving deeper into each team, so stay tuned for more blog posts around our AIS Internal Hackathon!

Authored by Jagrati Modi (team lead)

Thank you to the Data team for sharing their experience:

- Jagrati Modi (team lead)

- Souradeep Banerjee

- Nikhil Grover