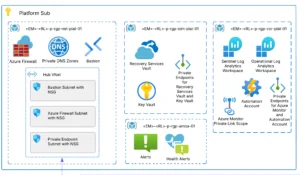

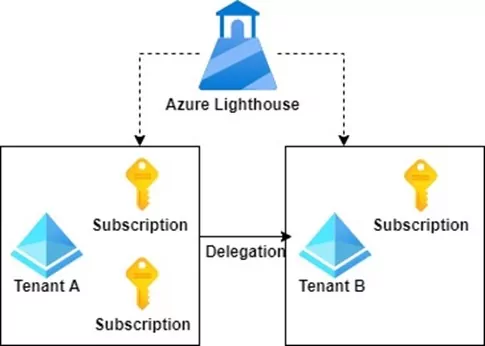

There are times when you must correlate different log sources in a centralized Azure Log Analytics Workspace. Doing so gives you a single management point for the tooling available within Azure: visualizations (Dashboards / Workbooks) and action and mitigation capabilities (Alerts / Automation). If you have resources spanning multiple tenants, Azure Lighthouse can delegate access to those resources so that their logs can be collected.

However, Azure Lighthouse has its limitations. One that we recently encountered with a customer was the inability to delegate across the Azure Commercial and Azure Government clouds:

“Delegation of subscriptions across a national cloud and the Azure public cloud, or across two separate national clouds, is not supported.”

Cross-tenant management experiences – Azure Lighthouse | Microsoft Docs

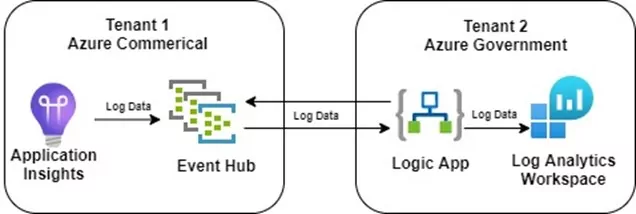

To facilitate log collection, we had to implement a Logic Apps solution to move data from one cloud to another.

Use Case

Application Insights data is hosted in an Azure Commercial tenant and must be transported for storage in a centralized Log Analytics Workspace in an Azure Government tenant.

Solution Overview

The solution is to export the logs to an event hub and use a Logic App to pull the data from the event hub into the workspace in the new tenant.

Below is a tutorial on how we were able to accomplish this design. Note: In our scenario, we are porting Application Insights data to a Log Analytics Workspace. A Log Analytics Workspace to Workspace transfer will also work: use the “Data Export” feature available in the Log Analytics Workspace blade to send the logs of your choosing to an event hub, then follow the same subsequent steps outlined in this article.

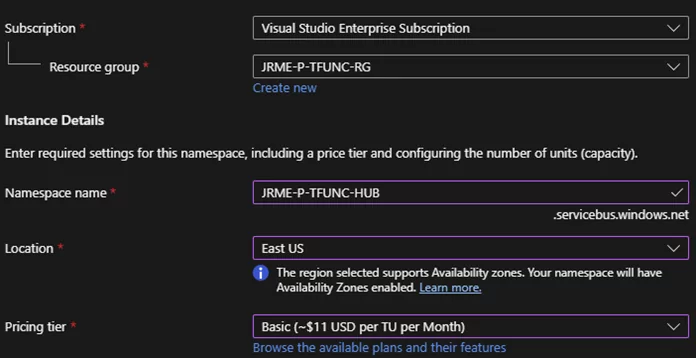

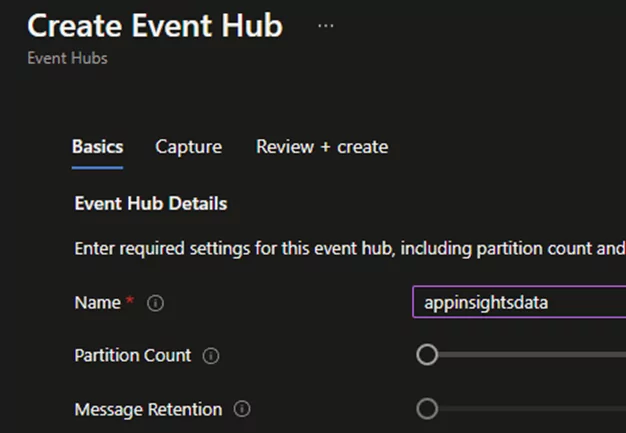

Step One: Create an Event Hub

To make Application Insights data available to a Logic App in another tenant, you will first send the data to an event hub. Create the Event Hubs namespace in the same region as your source of logs. In this example, I used the Basic pricing tier.

Once the namespace deployment is finished, you must create an event hub inside it with partition and retention settings. Navigate to your newly created Event Hubs namespace in Azure, and under the Entities section of the blade, create a new event hub. Here I am using the default partition and retention settings.

Once this hub is created, we are ready to send our Application Insights data.

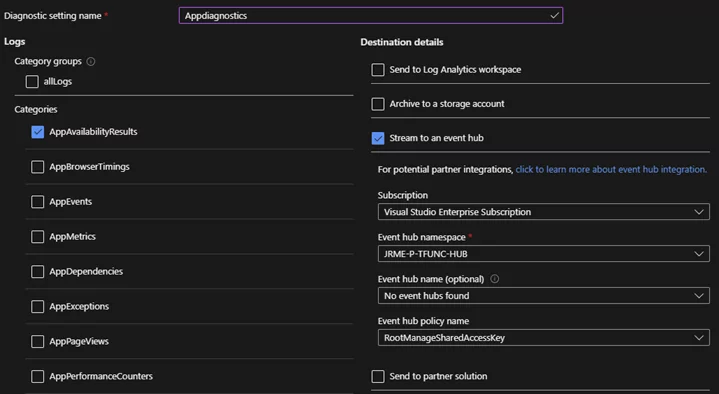

Step Two: Send Application Insights Data to the Event Hub

Once the event hub has been deployed, you can start sending Application Insights data to the hub.

Navigate to your Application Insights resource, and in the blade, go to “Diagnostic settings.” Here I will create a diagnostic setting that points to the event hub. Give the setting a logical name and select the application data you want to send on the left pane. On the right pane, select “Stream to an event hub” and use the event hub namespace we created in the previous step.
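If you would rather script this setting than click through the portal, the same choices (which categories to send, which event hub to stream to) map onto a diagnostic settings request body. The sketch below only builds that body as a Python dictionary. The resource ID, hub name, and category list are hypothetical placeholders, and the property names reflect my understanding of the Azure Monitor diagnostic settings schema, so verify them against the current documentation before relying on this.

```python
import json


def build_diagnostic_setting(auth_rule_id, event_hub_name, categories):
    """Build a diagnostic setting body that streams the given log categories to an event hub."""
    return {
        "properties": {
            # Authorization rule (shared access policy) on the Event Hubs namespace.
            "eventHubAuthorizationRuleId": auth_rule_id,
            # The event hub created inside that namespace.
            "eventHubName": event_hub_name,
            # One entry per telemetry category selected on the left pane.
            "logs": [{"category": name, "enabled": True} for name in categories],
        }
    }


if __name__ == "__main__":
    # All values below are placeholders, not real resources.
    body = build_diagnostic_setting(
        auth_rule_id="/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
        "Microsoft.EventHub/namespaces/<namespace>/authorizationRules/RootManageSharedAccessKey",
        event_hub_name="<event-hub-name>",
        categories=["AppRequests", "AppExceptions", "AppTraces"],
    )
    print(json.dumps(body, indent=2))
```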

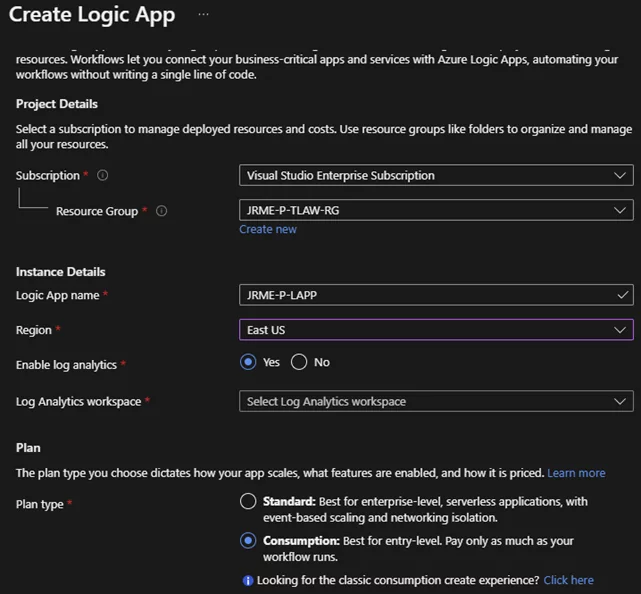

Step Three: Create a Logic App in the New Tenant

Now that application data is being exported into Event Hubs, a Logic App can be created in the new tenant to pull in the data and send it to the central Log Analytics Workspace. In this example, I used the Consumption tier.

Step Four: Program the Logic App to Retrieve the Event Hub Data

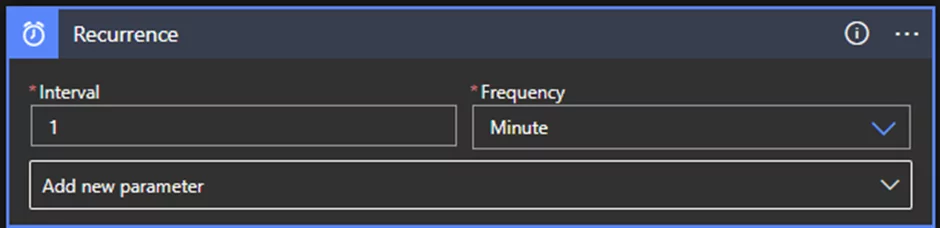

Within our Logic App, we want to build out three components within the designer:

- An execution trigger, which in our example is a recurring timer.

- A condition and action: when events are available in the event hub, parse the data in each message.

- A loop: for each event message, send the data to the Log Analytics Workspace.

For the first step, we will add a simple timer with a 1-minute recurrence frequency.

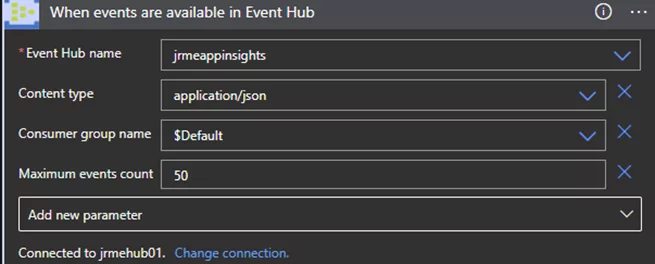

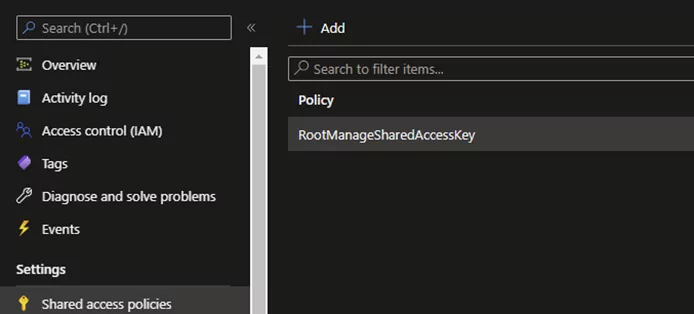

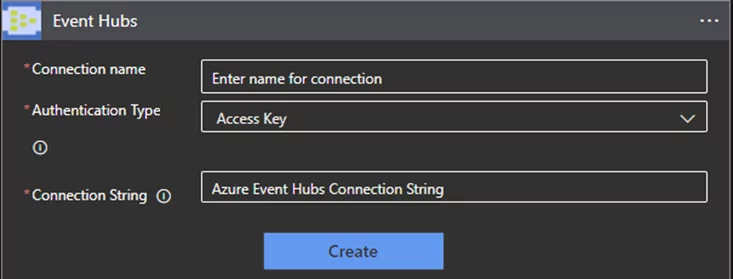

For the second step, I will add the event hub trigger “when events are available in the event hub.” This step requires a connection string, which can be found in the Event Hubs namespace blade under “Shared Access Policies.” Selecting the policy object will reveal the authentication keys and connection strings. You can use either the primary or the secondary key connection string.
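It can help to see what is inside the connection string before pasting it into the connector. The following is a minimal sketch, not part of the Logic App itself, that splits a connection string into its named parts. The sample value is made up, and the `EntityPath` segment only appears when the string is copied from a policy on a specific event hub rather than on the namespace.

```python
def parse_connection_string(conn_str):
    """Split an Event Hubs connection string into a dict of its 'Key=Value' segments."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: base64 keys often end with '=' padding.
        key, _, value = segment.partition("=")
        parts[key] = value
    return parts


if __name__ == "__main__":
    # Placeholder connection string; the key is not a real secret.
    sample = (
        "Endpoint=sb://example-namespace.servicebus.windows.net/;"
        "SharedAccessKeyName=RootManageSharedAccessKey;"
        "SharedAccessKey=ZmFrZS1rZXk=;"
        "EntityPath=example-hub"
    )
    for name, value in parse_connection_string(sample).items():
        print(f"{name}: {value}")
```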

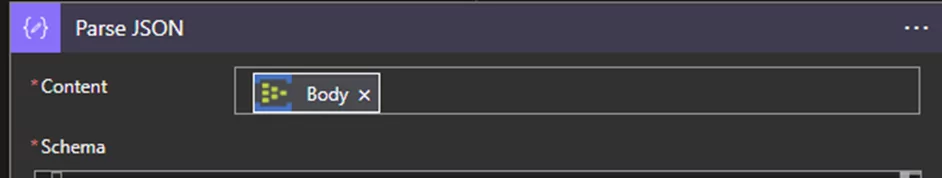

Next, I will need to parse out the event hub data. We will use the Parse JSON action under “Data Operations” for this. The content will be the body of the event hub message.

The JSON data schema will depend on what telemetry your application is sending. You can upload a sample to generate the schema.
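To make the shape of the data concrete, here is a small Python sketch of the parsing the Logic App performs. It assumes each event body is a JSON object wrapping a `records` array, which is how the messages looked in our case. The field names inside each record are illustrative only, since the real ones depend on your telemetry.

```python
import json

# Hypothetical event body: the fields inside each record are made up for illustration.
SAMPLE_EVENT_BODY = json.dumps({
    "records": [
        {"time": "2023-01-01T00:00:00Z", "category": "AppRequests", "properties": {"Name": "GET /"}},
        {"time": "2023-01-01T00:00:05Z", "category": "AppTraces", "properties": {"Message": "started"}},
    ]
})


def extract_records(event_bodies):
    """Parse each event body and collect every entry of its 'records' array."""
    records = []
    for body in event_bodies:
        payload = json.loads(body)
        # Bodies without a 'records' array contribute nothing.
        records.extend(payload.get("records", []))
    return records


if __name__ == "__main__":
    for record in extract_records([SAMPLE_EVENT_BODY]):
        print(record["category"], record["time"])
```

A body like `SAMPLE_EVENT_BODY` is also the kind of sample you can paste into the Parse JSON action to generate the schema.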

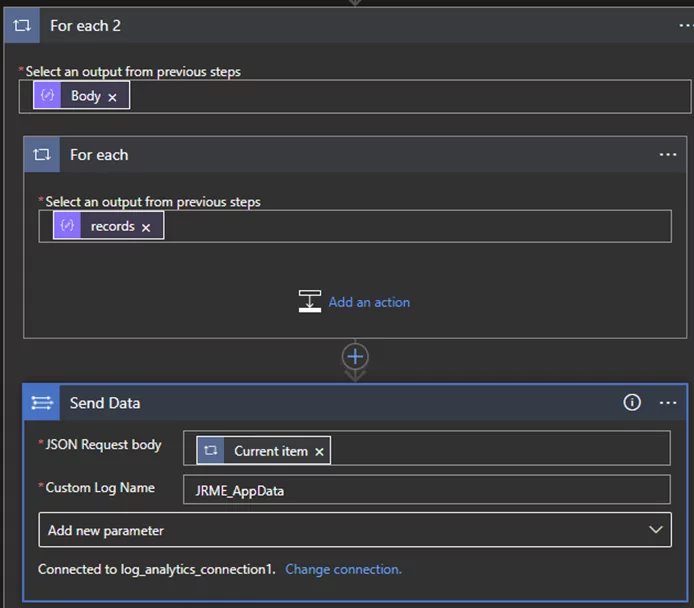

Lastly, I will want to send the logs to the Log Analytics Workspace in the new tenant. For this, I will set up a loop that goes through each event hub message. Within each message, a second loop goes through each of the records we previously parsed out and sends it to the Log Analytics Workspace using the Send Data operation. This last step requires a connection to the workspace. The connection details can be found under the “Agents Management” section of the Log Analytics Workspace blade. You will input the workspace ID and one of the two keys; either is fine.
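For readers curious what the workspace ID and key are used for, the sketch below shows how a request to the Azure Monitor HTTP Data Collector API is signed. As far as I understand, this is the API the Send Data connector calls on your behalf, but treat that as an assumption. The function names, the `AppInsightsLogs` log type, and the `ods.opinsights.azure.us` Azure Government domain are also my assumptions, so check them against your environment. The code only builds the request and does not send anything.

```python
import base64
import hashlib
import hmac
import json
from email.utils import formatdate


def build_signature(workspace_id, shared_key, date, content_length):
    """Return the SharedKey authorization header value for a Data Collector API POST."""
    string_to_sign = (
        f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    )
    decoded_key = base64.b64decode(shared_key)  # the workspace key is base64 encoded
    digest = hmac.new(decoded_key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('utf-8')}"


def build_request(workspace_id, shared_key, log_type, records,
                  domain="ods.opinsights.azure.us"):  # assumed Azure Government endpoint
    """Build the URL, headers, and body for posting records to a workspace."""
    body = json.dumps(records).encode("utf-8")
    date = formatdate(usegmt=True)  # RFC 1123 date, e.g. 'Mon, 01 Jan 2024 00:00:00 GMT'
    headers = {
        "Content-Type": "application/json",
        "Log-Type": log_type,  # becomes the custom table name, with a _CL suffix
        "x-ms-date": date,
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
    }
    url = f"https://{workspace_id}.{domain}/api/logs?api-version=2016-04-01"
    return url, headers, body


if __name__ == "__main__":
    fake_key = base64.b64encode(b"not-a-real-key").decode("utf-8")  # placeholder key
    url, headers, body = build_request(
        "00000000-0000-0000-0000-000000000000", fake_key, "AppInsightsLogs",
        [{"category": "AppRequests", "Name": "GET /"}],
    )
    print(url)
    print(headers["Authorization"])
```

Either workspace key produces a valid signature, which is why the connector accepts the primary or the secondary key.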

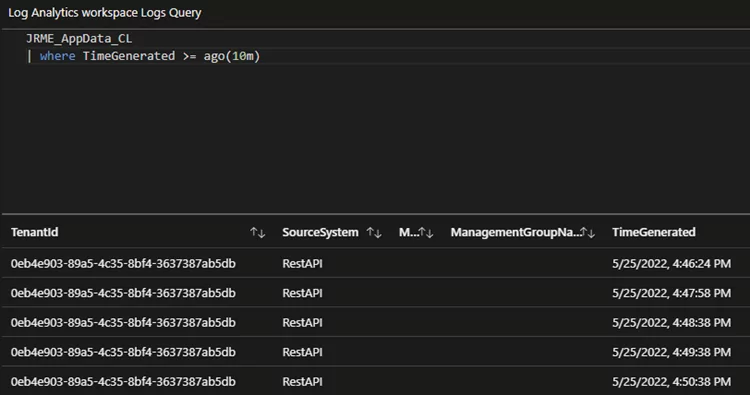

Once setup is complete, we’re ready to save our Logic App and start sending data to the workspace. After a few minutes, you should be able to query the custom log table you configured in the Logic App.

Conclusion

To conclude, there are ways to get data from Azure Commercial to a Log Analytics Workspace within Azure Government. With the help of Event Hubs and Logic Apps, we were able to send data from one tenant to the other and work around the Azure Lighthouse limitations. Hopefully, you will find this helpful when implementing your solution.